Search results for #gpuprogramming

PROGRAM YOUR GPU youtube.com/playlist?list=… #gpu #gpuprogramming #nvidia #intel #amd #graphics #computergraphics #dataviz #simulation #cudaeducation

🚀 Exciting Learning Opportunity! 🚀 For more details and registration: events.eurocc.lu/meluxina-intro… #GPUProgramming #CUDA #Supercomputing #ScientificComputing #MeluXina #Luxembourg

🔥 New Series! Learning GPU programming through Mojo puzzles - on an Apple M4! No expensive data center GPUs needed. No CUDA C++ complexity. Just Python-like syntax with systems performance. First video just dropped: youtube.com/watch?v=-VsP4k… #Mojo #GPUProgramming #AppleSilicon

Learn GPU Programming with Mojo 🔥 GPU Puzzles Tutorial - Introduction

NSIGHT GRAPHICS TUTORIAL: youtu.be/LtretfoL2tc | Vulkan, OpenGL, Direct3D profiling and debugging | #graphicsprogramming #gpuprogramming #gpgpu #howtoprogram #howtocode #computerprogramming #howtowriteaprogram #siliconvalley #cudaeducation

Nsight Graphics Tutorial | Cuda Education

For maximum performance, firms often develop custom CUDA kernels. This involves writing low-level code to directly program the GPU's parallel cores, squeezing out every drop of efficiency for critical tasks. #CUDA #GPUProgramming
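The post describes custom kernels only in general terms. As a rough illustration of what such a kernel looks like, here is a minimal CUDA sketch of SAXPY (`y = a*x + y`), a standard teaching example rather than anything taken from the post: each GPU thread computes one output element, and a host-side wrapper handles the memory copies and the launch. The names `saxpy` and `runSaxpy` and the block size of 256 are illustrative assumptions, and error checking on the CUDA runtime calls is omitted for brevity.

```cuda
#include <cassert>
#include <vector>
#include <cuda_runtime.h>

// Each thread handles one element: y[i] = a * x[i] + y[i].
__global__ void saxpy(int n, float a, const float* x, float* y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                     // guard threads past the end in the last block
        y[i] = a * x[i] + y[i];
    }
}

// Hypothetical host wrapper: copies inputs to the GPU, launches enough
// 256-thread blocks to cover n elements, and copies the result back.
// Error checking is omitted for brevity.
std::vector<float> runSaxpy(float a, const std::vector<float>& x,
                            std::vector<float> y) {
    assert(x.size() == y.size());
    int n = static_cast<int>(x.size());
    if (n == 0) return y;
    size_t bytes = n * sizeof(float);
    float* dX = nullptr;
    float* dY = nullptr;
    cudaMalloc(&dX, bytes);
    cudaMalloc(&dY, bytes);
    cudaMemcpy(dX, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dY, y.data(), bytes, cudaMemcpyHostToDevice);
    const int threadsPerBlock = 256;
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up
    saxpy<<<blocks, threadsPerBlock>>>(n, a, dX, dY);
    // Device-to-host copy on the default stream waits for the kernel to finish.
    cudaMemcpy(y.data(), dY, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dX);
    cudaFree(dY);
    return y;
}
```

For example, `runSaxpy(2.0f, {1.0f, 2.0f, 3.0f}, {10.0f, 20.0f, 30.0f})` returns `{12.0f, 24.0f, 36.0f}`. A kernel tuned for a real workload would typically go beyond this one-thread-per-element mapping, for instance with coalesced memory access, shared memory, or grid-stride loops.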

🚀 On-site Training: High-Level #GPU Programming, 14-16 Feb 2024, CSC Training Facilities. Master SYCL & Kokkos for CPU/GPU. Learn parallelism, memory management, ... Expert-led. Limited spots! Register before 7 Feb 2024. 💻👩‍💻 #LUMI Training #GPUProgramming @LUMI @VSCluster

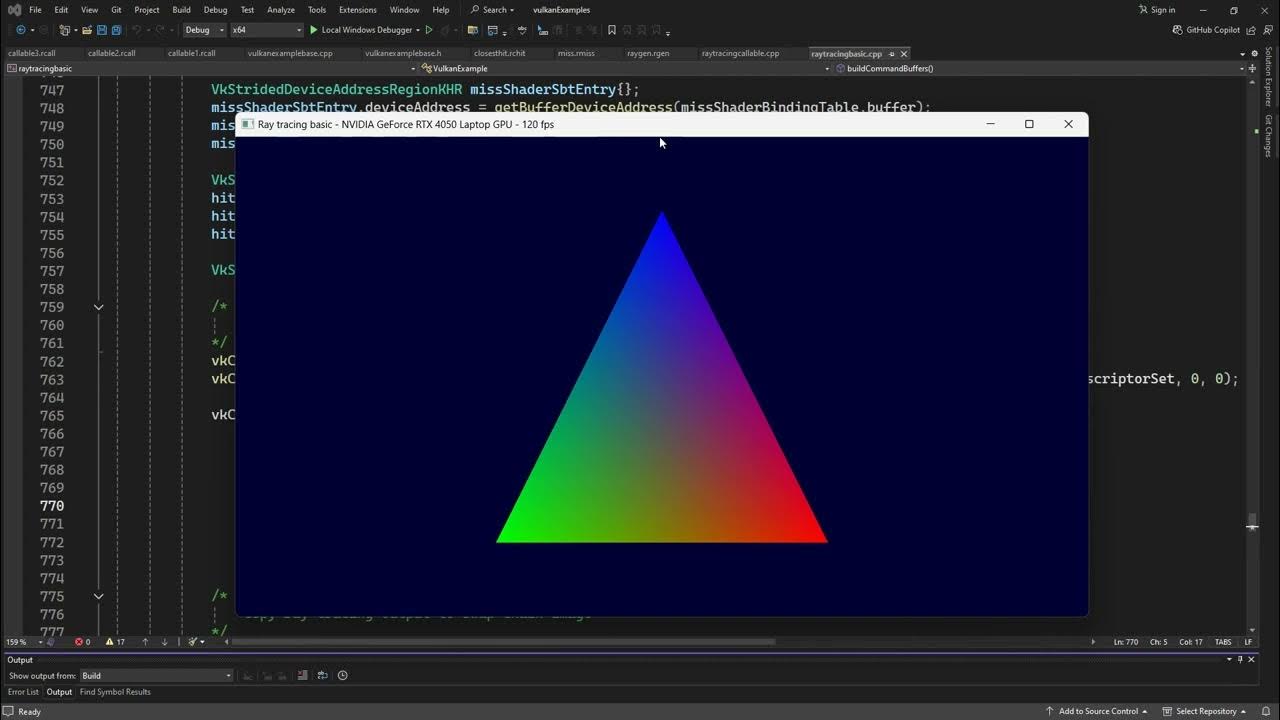

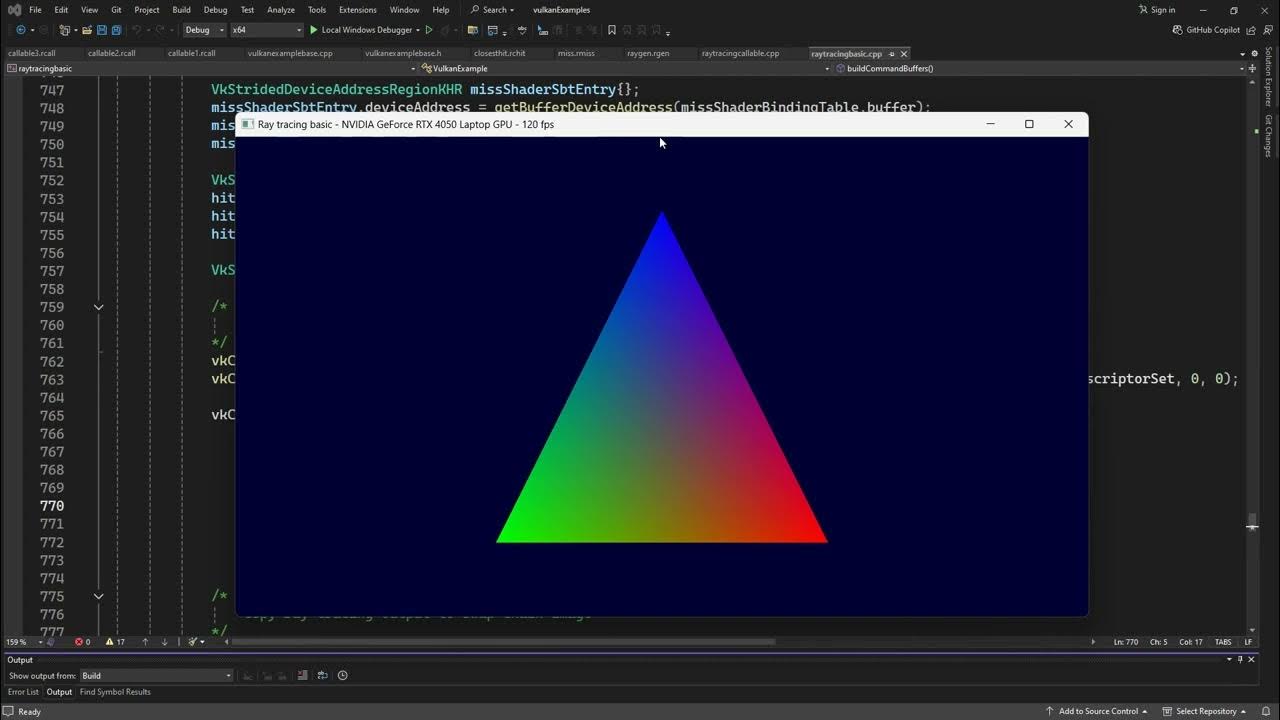

GPU PROGRAMMING | Callable Shader Binding Table | youtu.be/m1F6QBkgSts #gpu #gpuprogramming #programgpu #hpc #vulkan #vulkanapi #computergraphics #raytracing #nvidia #amd #intel #cudaeducation

VULKAN API DISCUSSION | Ray Tracing 8.1 | Callable Shader Binding...

#TrainingTuesday focuses on Optimising HIP Kernels. 💻 HIP kernel performance is a multi-layered puzzle. 🧩 Unpack several strategies to boost your kernel performance, watch 👀: bit.ly/3WL8rSr #HIP #GPUprogramming #optimisation #training #education

COMPUTER GRAPHICS | Frustum culling equation to discard objects that aren't relevant youtu.be/WmQPuaj_j4k #gpu #gpuprogramming #frustumculling #computergraphics #hpc #howtocode #howtoprogram #geometry #shader #intel #amd #nvidia #dataviz #computersimulation #cudaeducation

Vulkan API | Frustrum Culling + LOD + Indirect Rendering PART 2 |...
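The post does not spell out the culling equation. A common form of the test, assumed here, writes each frustum plane as `dot(n, p) + d = 0` with a unit normal `n` pointing into the frustum, and discards an object whose bounding sphere (center `c`, radius `r`) satisfies `dot(n, c) + d < -r` for at least one plane. The CUDA sketch below applies that test with one thread per object. The linked video works with the Vulkan API, so this is not its implementation, and the names `Plane`, `Sphere`, `frustumCull`, and `cullSpheres` are hypothetical.

```cuda
#include <cassert>
#include <vector>
#include <cuda_runtime.h>

// Plane dot(n, p) + d = 0; the unit normal (nx, ny, nz) is assumed to point into the frustum.
struct Plane { float nx, ny, nz, d; };
// Bounding sphere with center (x, y, z) and radius r.
struct Sphere { float x, y, z, r; };

// One thread per object: the object is culled (visible[i] = 0) if its bounding
// sphere lies entirely outside at least one plane, i.e. dot(n, c) + d < -r.
__global__ void frustumCull(const Sphere* spheres, int count,
                            const Plane* planes, int planeCount, int* visible) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i >= count) return;
    Sphere s = spheres[i];
    int inside = 1;
    for (int p = 0; p < planeCount; ++p) {
        float dist = planes[p].nx * s.x + planes[p].ny * s.y +
                     planes[p].nz * s.z + planes[p].d;  // signed distance to the plane
        if (dist < -s.r) { inside = 0; break; }          // fully outside this plane
    }
    visible[i] = inside;
}

// Hypothetical host wrapper: uploads spheres and planes, launches the kernel,
// and returns one 0/1 visibility flag per sphere. Error checking omitted.
std::vector<int> cullSpheres(const std::vector<Sphere>& spheres,
                             const std::vector<Plane>& planes) {
    assert(!planes.empty());
    int n = static_cast<int>(spheres.size());
    int planeCount = static_cast<int>(planes.size());
    std::vector<int> visible(n, 0);
    if (n == 0) return visible;
    Sphere* dSpheres = nullptr;
    Plane* dPlanes = nullptr;
    int* dVisible = nullptr;
    cudaMalloc(&dSpheres, n * sizeof(Sphere));
    cudaMalloc(&dPlanes, planeCount * sizeof(Plane));
    cudaMalloc(&dVisible, n * sizeof(int));
    cudaMemcpy(dSpheres, spheres.data(), n * sizeof(Sphere), cudaMemcpyHostToDevice);
    cudaMemcpy(dPlanes, planes.data(), planeCount * sizeof(Plane), cudaMemcpyHostToDevice);
    const int threadsPerBlock = 256;
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;
    frustumCull<<<blocks, threadsPerBlock>>>(dSpheres, n, dPlanes, planeCount, dVisible);
    cudaMemcpy(visible.data(), dVisible, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dSpheres);
    cudaFree(dPlanes);
    cudaFree(dVisible);
    return visible;
}
```

Spheres that straddle a plane are kept, so the test is conservative: it may keep a few objects near the frustum's corners that are actually outside it, but it never discards an object whose bounding sphere is inside.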
