#explainability search results

We are thrilled to announce our latest paper, "Unifying VXAI: A Systematic Review and Framework for the Evaluation of Explainable AI." This work introduces a new perspective on how to test explanations. #XAI #Explainability arxiv.org/abs/2506.15408

@OBirTivin about to present “using #explainability for #bias mitigation” at @AcmIcmi. @heysemkaya @UUBeta

When AI answers on gut feeling, @SentientAGI explains with evidence. @SentientAGI @EdgenTech #AGI #Eval #Explainability

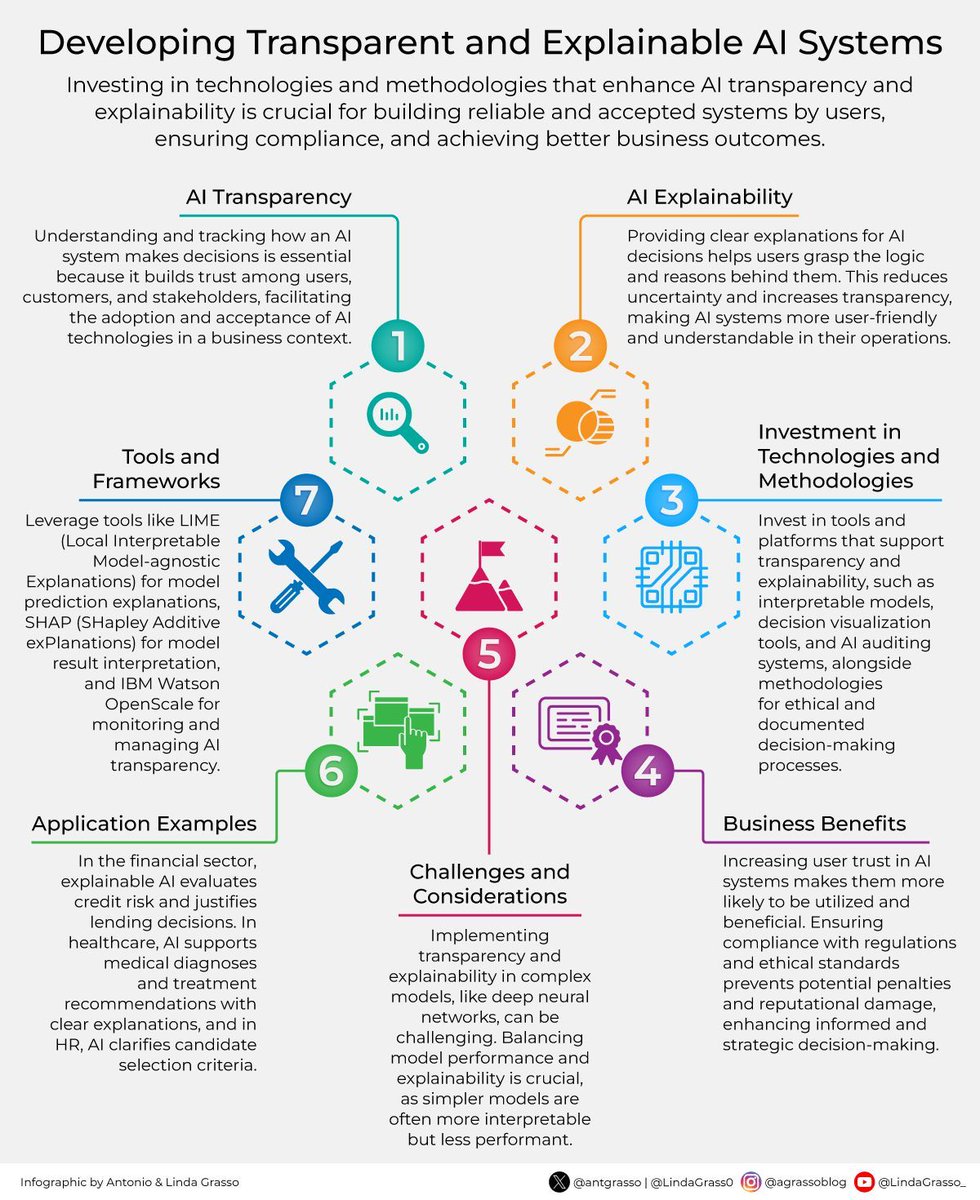

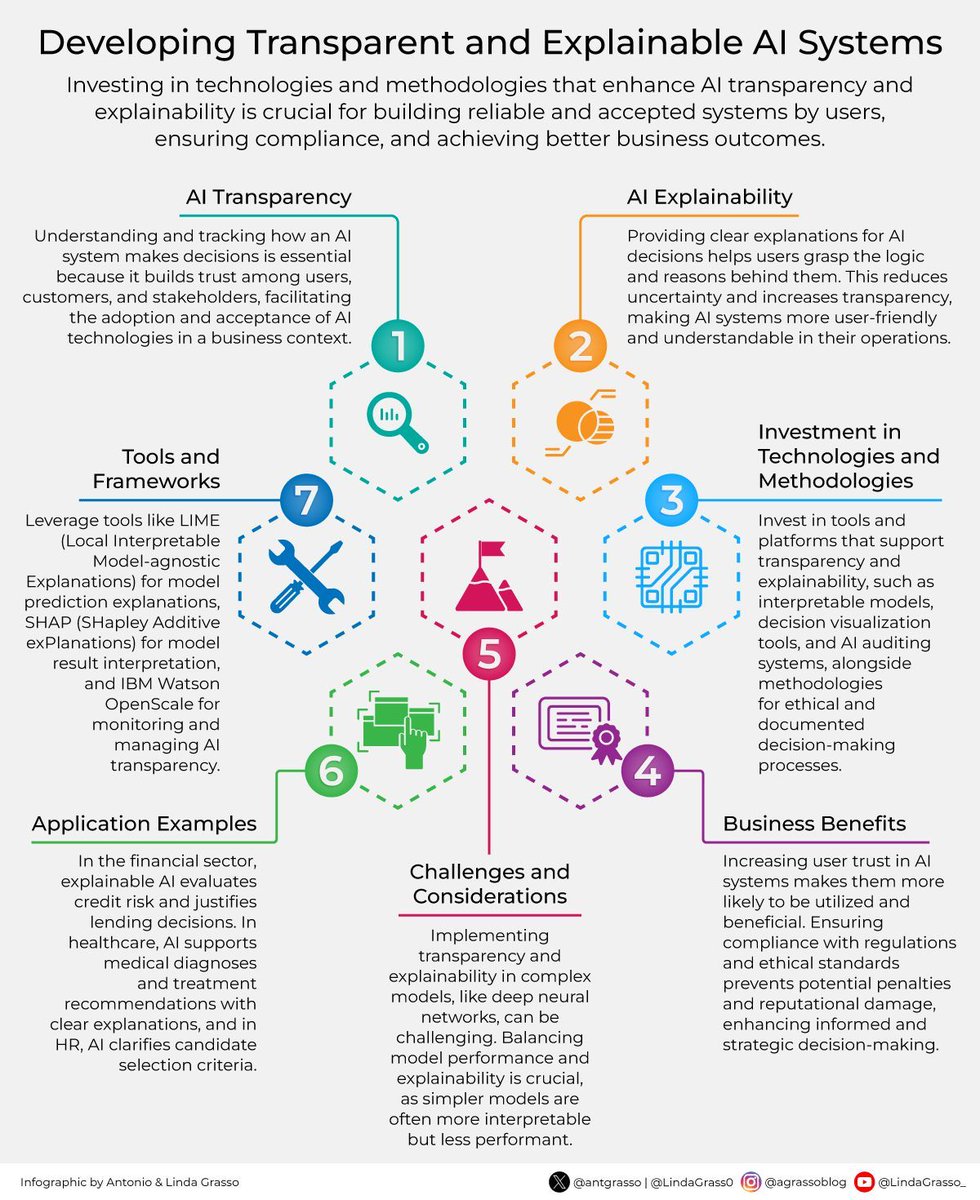

Investing in technologies and methodologies that enhance AI transparency and explainability is crucial for building reliable systems that users accept, ensuring compliance, and achieving better business outcomes. #AI #Transparency #Explainability

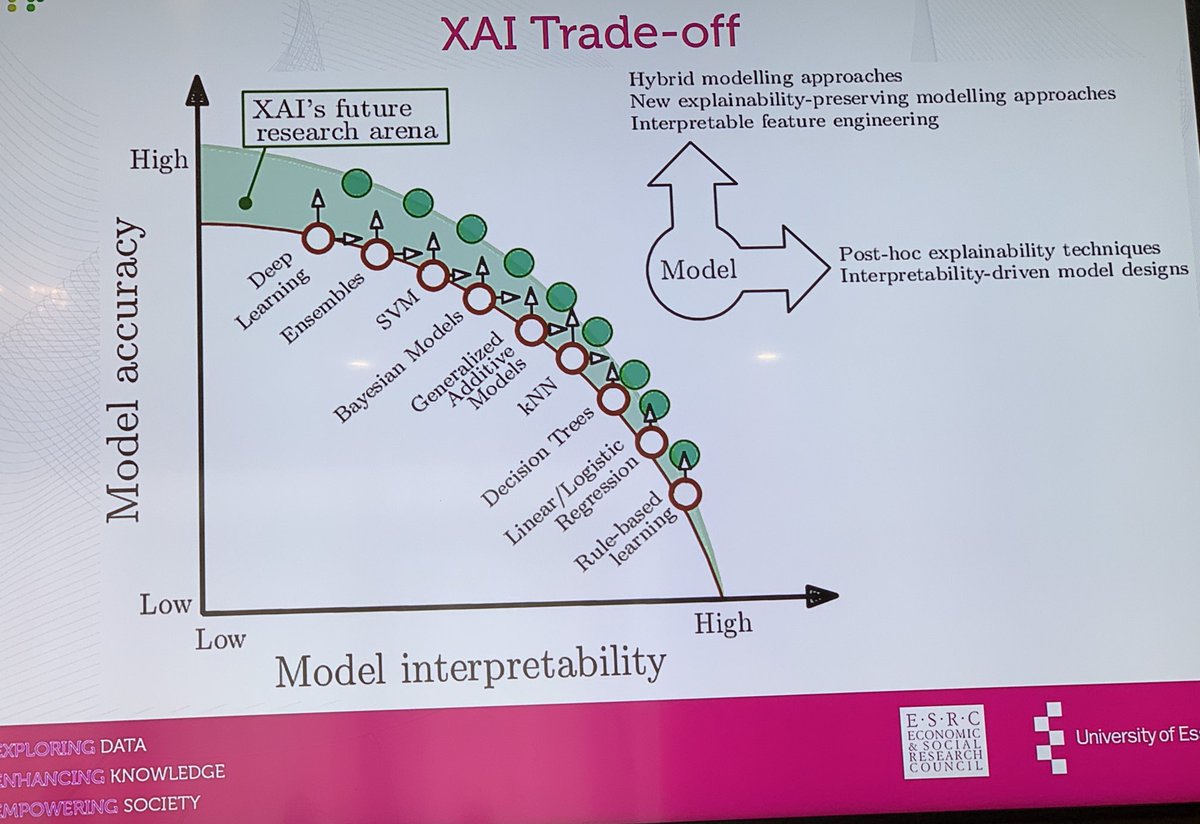

We can build trust in #AI by building #explainability into the systems, @sagihaider explains. @BLGDataResearch @EssexIADS @uni_essex_csee

Session 5 on #Explainability and #DecisionMaking chaired by @peterpaws is about to begin #UMAP2023 @UMAPconf

Check out this blog post to learn how to use D-RISE to explain object detection results for MATLAB, TensorFlow, and PyTorch models. #objectdetection #explainability #matlab #pytorch #tensorflow spr.ly/6012cVRYE

Excited to share our paper "Explainable Depression Symptom Detection in Social Media" 🎉 Huge thanks to @anxopvila and @jparapar for their guidance and support in achieving my first JCR publication 🙏 🔗link.springer.com/article/10.100… #MentalHealth #Explainability #OpenAccess

Check out our poster #ICSEC presented by Ms. Yasmin Abdelaal on “Exploring the Applications of #Explainability in #Wearable Data Analytics: A Systematic Literature Review” at the fourth International Computational Science and Engineering Conference (ICSEC) With Dr…

So excited with this new achievement on @researchgate reads! Our paper with @jasoneramirez (@unisevilla) and @DoloresRomeroM (@CBS_ECON) under @needs_project is attracting the attention of researchers interested in #Explainability in #MachineLearning Enjoy reading!

Evaluating explainability techniques on discrete-time graph neural networks Manuel Dileo, Matteo Zignani, Sabrina Tiziana Gaito. Action editor: Christopher Morris. openreview.net/forum?id=JzmXo… #explainability #temporal #gnns

How Important Is #Explainability in #Cybersecurity AI? buff.ly/459Fdzx @RWW #tech #security #infosec #explainableAI #ArtificialIntelligence #digital #innovation #cyberthreats #cyberattacks #business #leaders #leadership #management #CISO #CIO #CTO #CDO

It was a pleasure for me to visit the Signal Processing-ICS lab at the Foundation for Research and Technology - Hellas (FORTH) this Monday and give a talk about Explainable Adaptation of Time Series Forecasting using Regions-of-Competence. #timeseries #explainability #forecasting

The Imperative of #Explainability in AI-Driven #Cybersecurity > scoop.it/topic/artifici… #tech #security #infosec #explainableAI #ArtificialIntelligence #digital #innovation #cyberthreats #cyberattacks #business #leaders #leadership #management #CISO #CIO #CTO #CDO

The Imperative of #Explainability in AI-Driven #Cybersecurity > buff.ly/4gimnfr #tech #security #infosec #explainableAI #ArtificialIntelligence #digital #innovation #cyberthreats #cyberattacks #business #leaders #leadership #management #CISO #CIO #CTO #CDO

I am happy to share that "Listenable Maps for Zero-Shot Audio Classifiers" has been accepted at #NeurIPS2024. The paper explores #explainability for #zeroshot classifiers. 📋 Paper: arxiv.org/pdf/2405.17615 The code will be released soon!

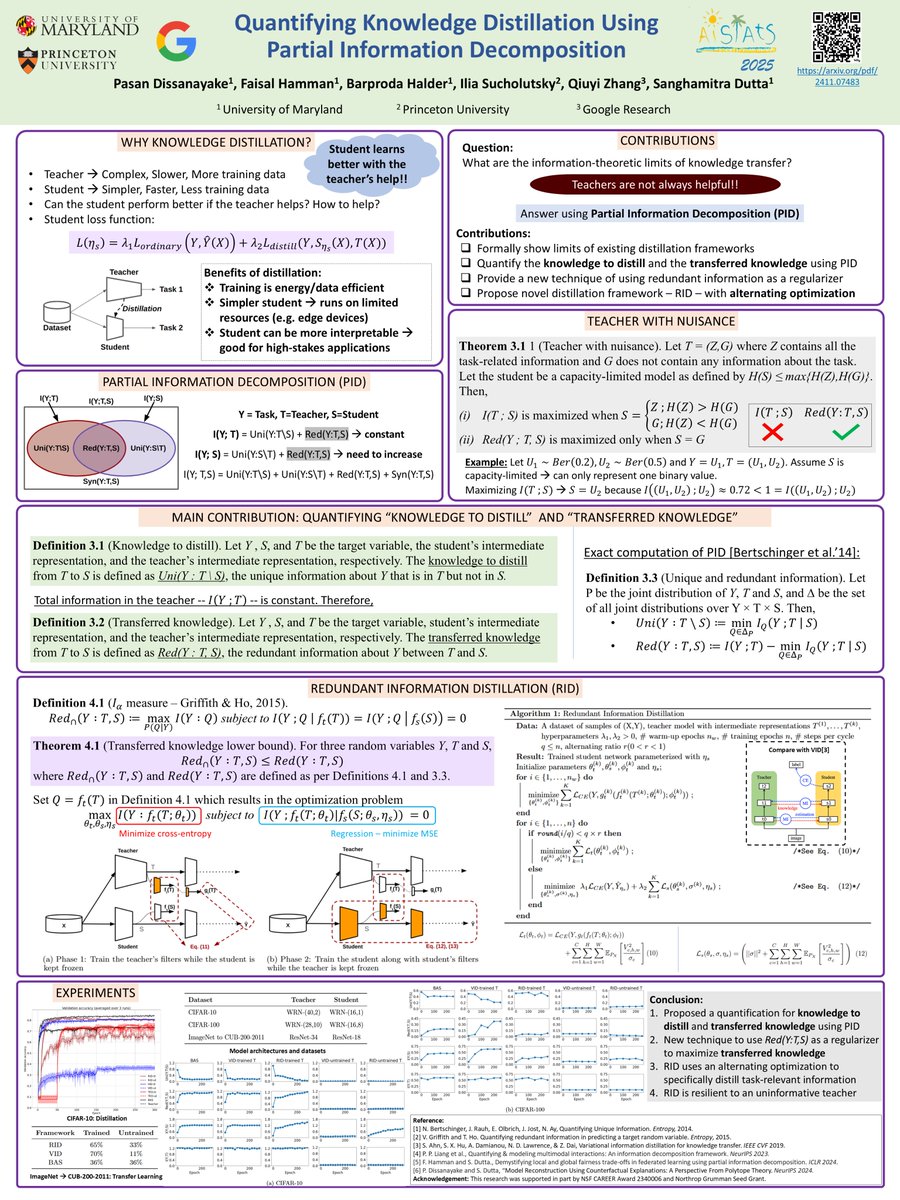

📢 Our paper "Quantifying Knowledge Distillation Using Partial Information Decomposition" will be presented at #AISTATS2025 on May 5th, Poster Session 3! Our work brings together #modelcompression and #explainability through the lens of #informationtheory Link:…

Do Concept Bottleneck Models Respect Localities? Naveen Janaki Raman, Mateo Espinosa Zarlenga, Juyeon Heo, Mateja Jamnik. Action editor: Jeremias Sulam. openreview.net/forum?id=4mCkR… #explainability #locality #concepts

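On a day with plenty of data and little context, @Mira_Network cleared away the noise. Once the reasons behind the rising curve came into view, tomorrow became clearer for you and me too. @KaitoAI @EdgenTech #MiraNetwork #MLOps #Explainability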

Enterprises will need to learn to prevent #autonomy from turning into #opacity. By embedding #explainability and #interpretability into the design of #autonomousAI systems, maintaining human oversight capabilities, and establishing clear #audittrails for all automated…

Example of the functioning of our Speech Concept Bottleneck Models #SCBM #XAI #explainability #interpretability #ML #AI #hatespeech #hate #counterspeech #toxic

In our new paper "Distilling knowledge from large language models: A #concept #bottleneck #model for #hate and #counterspeech recognition" we introduce Speech Concept Bottleneck Models (#SCBM) - a step toward #interpretable #LLM. 📄doi.org/10.1016/j.ipm.…

AI news highlights: ChatGPT Pulse launches, Gemini Robotics 1.5, Nvidia invests in data centers, new AI models enhance research. 👇 📖 t.me/ai_narrotor/22… 🎧 t.me/ai_narrotor/22… #AInews, #UserApplication, #Explainability

3. Key to human-centric AI: •Tiered autonomy (different levels of control) •Explanations layered for users, devs, regulators •Feedback loops that actually adapt That’s how trust scales. #AI #Explainability #TrustInTech

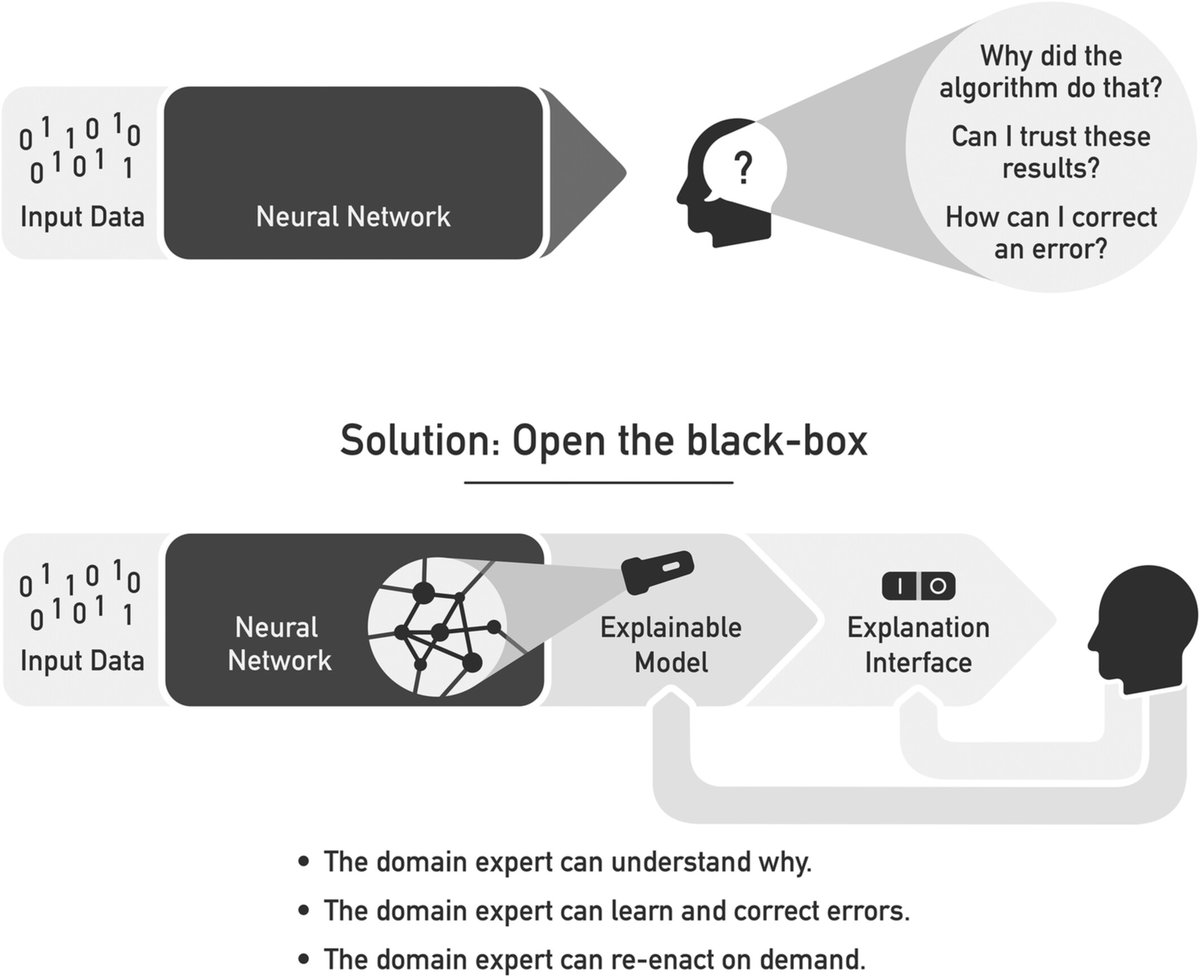

🤖 Interpretability = how a model works. 🧠 Explainability = why it made a decision. Together, they build trust & transparency in AI! 🔗linkedin.com/in/octogenex/r… 🔗instagram.com/ds_with_octoge… #AI365 #ML #Explainability #TrustInAI

Myth: AI is a black box nobody understands. ❓🤖 Fact: Explainable AI (XAI) developments mean doctors and patients can see how and why AI suggests treatments, increasing trust. ✅ #Explainability #TrustInTech

AI is an iterative technology. Its incremental value loops learn and deliver outcomes with each iteration. Implement #confidencethresholds for the desired outcomes. Ensure data provenance and decision audit trails. Use SHAP values and counterfactuals for #explainability.…

Trust in your systems will be the most important currency. #Explainability will be your best armour. #Reasoning chains will need to be documented, fairness constraints clarified, and #bias detection audits conducted. #Privacy-by-design will be a mandate. Include all this in…

AI's transformer architecture is enabling systems to reason - plan - act. However, legacy #infradebt remains a foundational choke. On top of that, opacity and model #drift can cascade into million-dollar blindspots. Lack of #explainability can erode trust. Ambiguous oversight…

Submit your #xAI in Finance manuscript to our workshop. We have lined up exciting panelists and speakers. Check out more details: lnkd.in/g79-fmkK #AIinFinance #explainability #trustworthyai #responsibleai

Trust is a currency which will grow even more important in an AI-driven #strategyformulation world. #Explainability will be expected to demystify decisions. Reasoning chains will have to be documented. Fairness constraints and #bias detection patterns will be required. SHAP…

Transparency and explainability are the foundation of trust in medical AI. Every decision must remain open to audit and accountable to human judgment. #Transparency #Explainability #TrustInAI #EthicsInAI

🔍 Can we open the black box of LLMs? Two main paths: 1️⃣ Natural language explanations 2️⃣ Mechanistic interpretability Which brings us closer to real transparency? #AI #LLM #Explainability #Interpretability #MachineLearning #TrustworthyAI #WiAIRpodcast #WiAIR

How Important Is #Explainability in #Cybersecurity AI? > scoop.it/topic/artifici… #tech #security #infosec #explainableAI #ArtificialIntelligence #digital #innovation #cyberthreats #cyberattacks #business #leaders #leadership #management #CISO #CIO #CTO #CDO

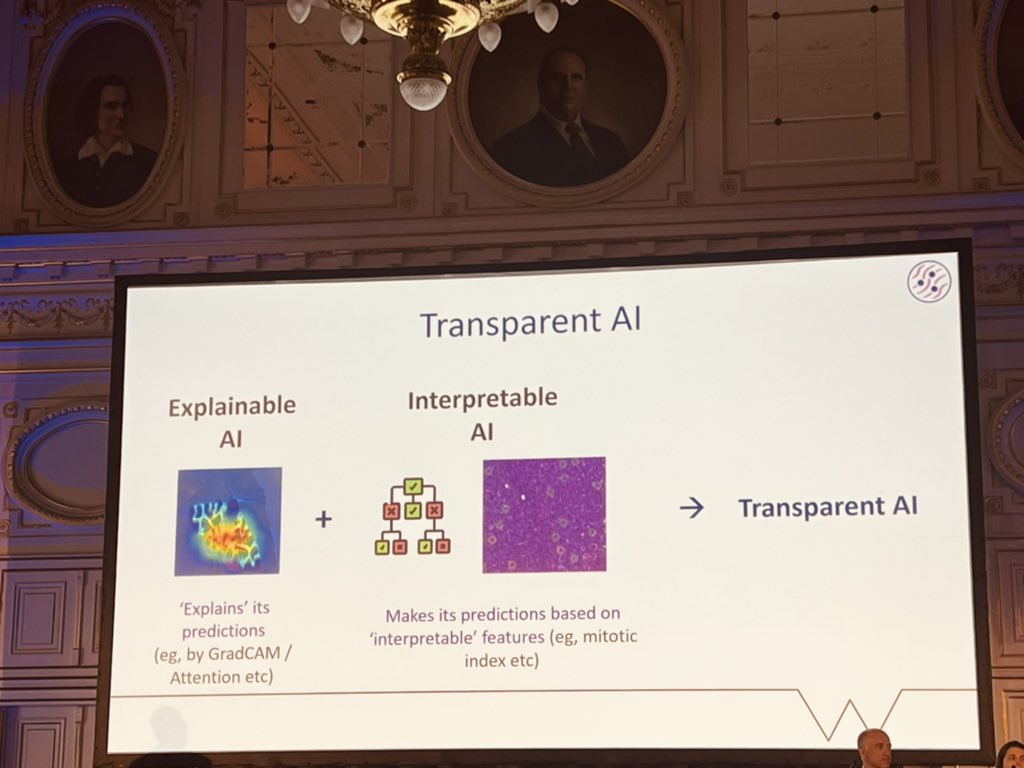

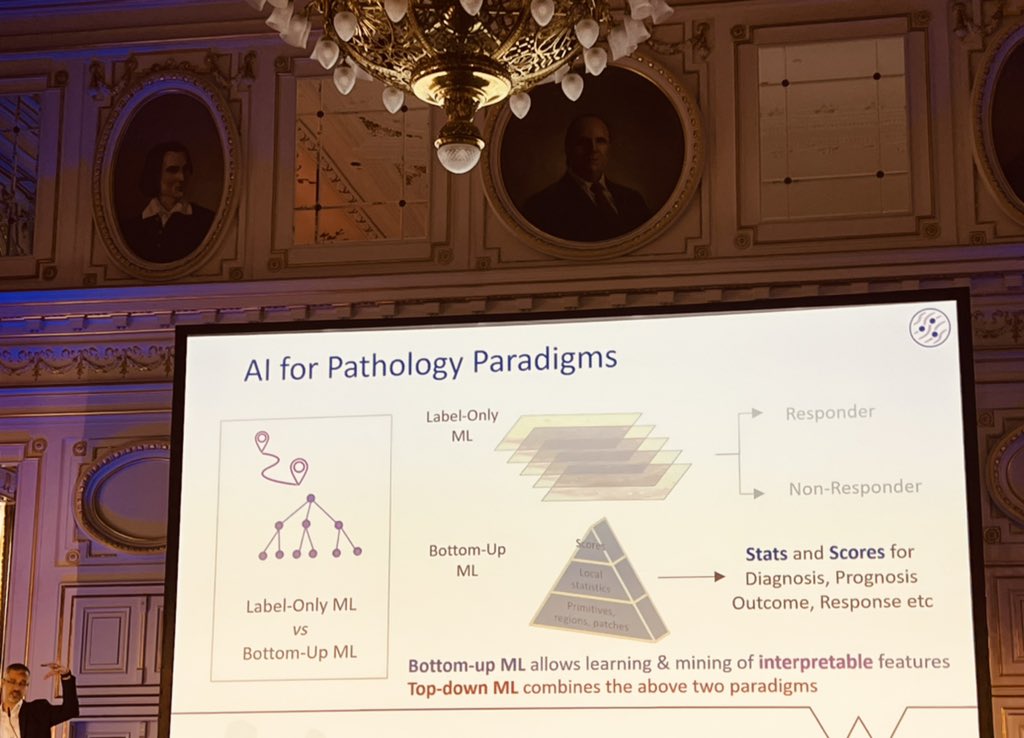

Hot off the press! Our review: „#Explainability and causability in #digitalpathology“ doi.org/10.1002/cjp2.3… - led by colleagues at #EMPAIAAustria @MedUniGraz | #AI #xAI #pathology @TheEMPAIA

Learn how to apply best-in-class #explainability on state-of-the-art #GraphNeuralNetworks models in production in this ondemand webinar hubs.ly/Q021lz6-0

How Important Is #Explainability in #Cybersecurity AI? > buff.ly/3YOmDdW #tech #security #infosec #explainableAI #ArtificialIntelligence #digital #innovation #cyberthreats #cyberattacks #business #leaders #leadership #management #CISO #CIO #CTO #CDO

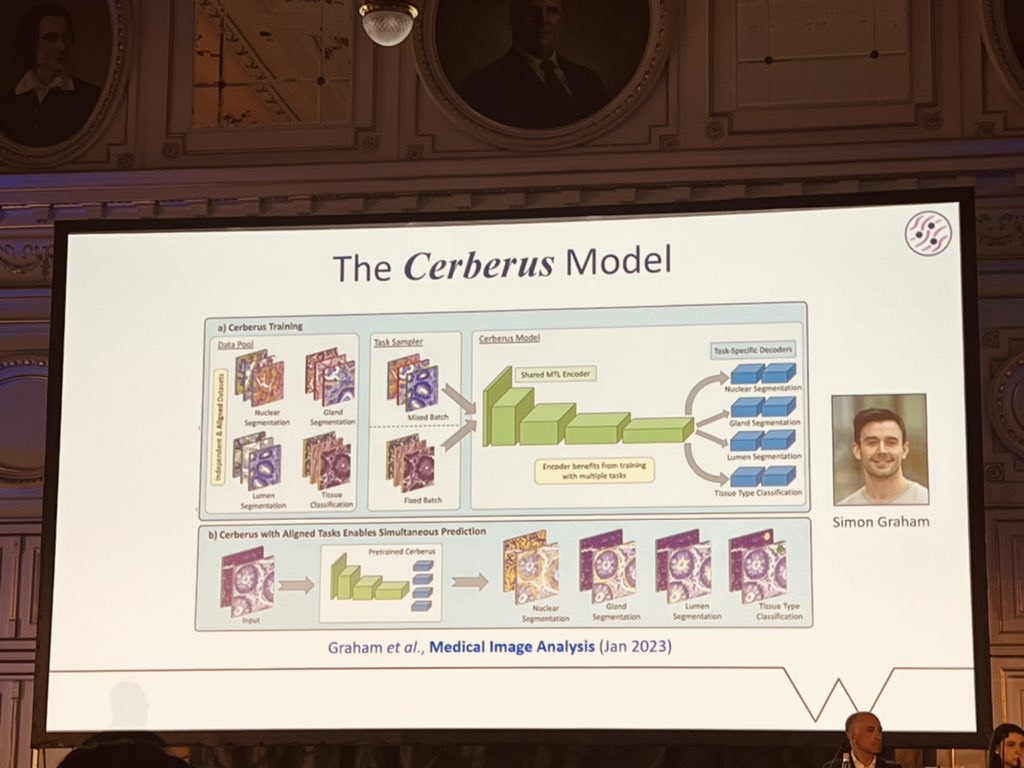

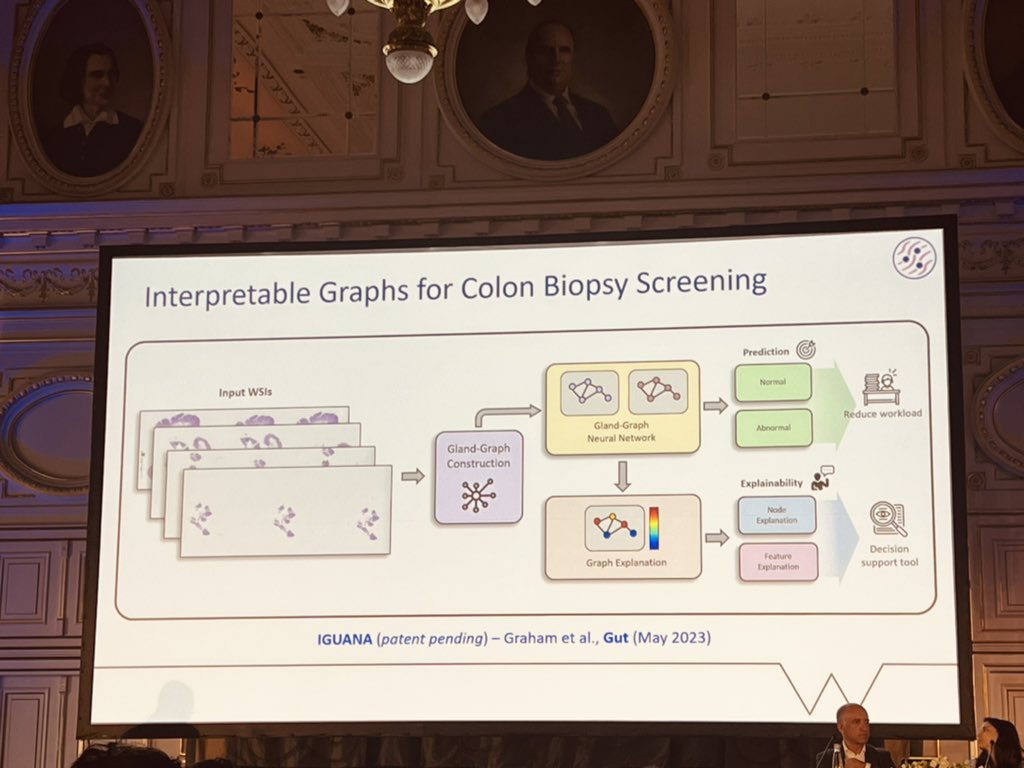

The very last shots from this great #ECDP2023, the last invited speaker Prof N. Rajpoot @nmrajpoot with a wonderful lecture on the concepts of #explainability and #interpretability of #AI applied to #Pathology well-explained through the interesting works of his excellent UK group

Excited about our latest research on using explainable ML to classify sagittal gait patterns in cerebral palsy and gaining valuable insights from gait analysis data. Presented @ @GAMMA_in_Motion #MachineLearning #Explainability #CerebralPalsy #Research 📄doi.org/10.1016/j.gait…

MIT Taxonomy Helps Build #Explainability into the Components of #MachineLearning Models #ExplanableAI scitechdaily.com/mit-taxonomy-h…

#AI #Explainability is an important topic within @TheEMPAIA ecosystem. A recent workshop by @zvki_de with contribution from #EMPAIA’s Christian Geißler (@DAI_Labor) has published a paper with 5 conclusions on #xAI in medicine (article in German): zvki.de/zvki-exklusiv/…

Using #counterfactuals to improve #explainability? Thanks to David Martens for the amazing talk! #databeers #brussels
