#parallelcomputing 搜索结果

The Parallel Computing Market is propelled by the emergence of adaptive load balancing algorithms that optimize execution. Companies are focusing on increasing the reach of their products and services. Study insights @ is.gd/FhkIgT #GVR #Parallelcomputing #research

Had a blast with my parallel computing class visiting the campus cluster! 😄 One student opted out of the pic, so we got creative and replaced them with an AI-generated person. Can you guess who? Btw, this is half of the class, couldn't fit all in one pic. #ParallelComputing

The Future of #DataScience and #ParallelComputing! #BigData #Analytics #AI #MachineLearning #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #ReactJS #CloudComputing #Serverless #DataScientist #Linux #Programming #Coding #100DaysofCode geni.us/Future-of--DSci

There is a theoretical maximum speed in concurrent programming. 💻Amdahl's Law says that if 50% of code is parallelizable then there is 2x max speedup. 📈95% parallelizable code gives 20x. #TechTalk #ParallelComputing #SoftwareEngineering

#Article 📜 Scalable O(log2n) Dynamics Control for Soft Exoskeletons by Julian Colorado, et al. mdpi.com/2076-0825/13/1… @UniJaveriana @MDPIEngineering #softexoskeletons #parallelcomputing #embeddedsystems #HIL #dynamicscontrol

David A. Padua from the @UofIllinois has been named the 2024 Ken Kennedy Award recipient for his contributions to the theory and practice of parallel compilation and tools, as well as outstanding mentorship and community service. #IEEE #ParallelComputing bit.ly/3Batg2i

I warmly recommend those two books to all #gamedev wanting to dive deep into #parallelcomputing . I've read them years ago, and they were of great help. I'm currently "rethinking" some #procedural algorithms using the parallelism paradygm.

Pi cluster 😍🤨 @thetechguymike #RaspberryPiCluster #ClusterComputing #ParallelComputing #DistributedSystems #HighPerformanceComputing #TechProjects #DIYCluster #Homelab #RaspberryPiProjects #ClusterNetworking

RT Use Python to Download Multiple Files (or URLs) in Parallel #datascience #python #parallelcomputing #programming dlvr.it/SvqKFN

RT Supercharge Your Python Asyncio With Aiomultiprocess: A Comprehensive Guide #parallelcomputing #pythontoolbox #concurrency #python #datascience dlvr.it/SrjnYS

RT Vectorize and Parallelize RL Environments with JAX: Q-learning at the Speed of Light⚡ dlvr.it/SxTHL8 #parallelcomputing #jax #python #reinforcementlearning

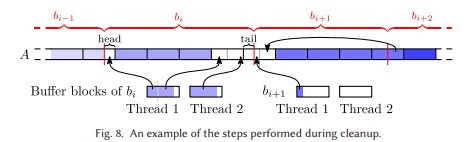

Read "Engineering In-place (Shared-memory) Sorting Algorithms," by Michael Axtmann, Sascha Witt, Daniel Ferizovic, Peter Sanders in ACM Transactions on Parallel Computing (TOPC), which received its first Impact Factor: bit.ly/3RUcR8o #parallelcomputing #impactfactor

Got TornadoVM installed on my PC, and executed one of the built-in test cases. It showed a 1100 x speedup of an 2048 x 2048 matrix multiplication - by running it on an Nvidia RTX 3080Ti (laptop) - over running it on the CPU (Intel i9 12900H) ! @tornadovm #Java #parallelcomputing

Guide to Parallel Computing with Julia: Learn about concurrency, #multithreading macros, and more. Watch the video here juliahub.com/company/resour… #JuliaLang #ParallelComputing

💡 Invitation to @EuroCC_Czechia courses in autumn: → Basic #QuantumComputing.. events.it4i.cz/event/188/ → Mastering Transformers.. events.it4i.cz/event/191/ → #DataParallelism.. events.it4i.cz/event/195/ → #ParallelComputing with #MATLAB.. events.it4i.cz/event/193/

1/ Parallel computing can greatly speed up your R code, especially when working with large datasets or computationally intensive tasks. Luckily, there are several R packages that make parallel computing easier than ever👇 #Rstats #ParallelComputing

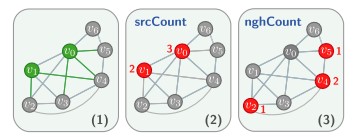

Read "Theoretically Efficient Parallel Graph Algorithms Can Be Fast and Scalable," by Laxman Dhulipala, Guy E. Blelloch, Julian Shun in ACM Transactions on Parallel Computing (TOPC), which received its first Impact Factor: bit.ly/3tsNv7x #parallelcomputing #research

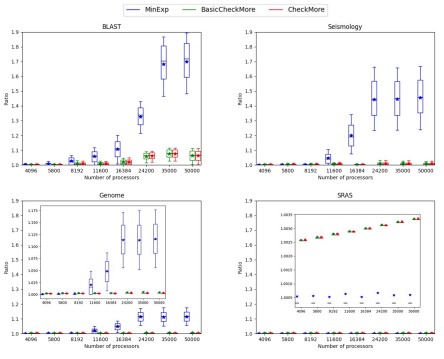

Check out "Checkpointing Workflows à la Young/Daly Is Not Good Enough," by Anne Benoit, Luca Perotin, Yves Robert, Hongyang Sun in ACM Transactions on Parallel Computing (TOPC), received its first Impact Factor: bit.ly/3QfSDFe #parallelcomputing #impactfactor

Parallel Computing Works! freecomputerbooks.com/Parallel-Compu… Look for "Read and Download Links" section to download. Follow me if you like this post. #SoftwareEngineering #ParallelComputing #ParallelProgramming #GridComputing

Designing and Building Parallel Programs: Concepts and Tools for Parallel Software Engineering - freecomputerbooks.com/Designing-and-… Look for "Read and Download Links" section to download. Follow me if you like this #SoftwareEngineering #ParallelComputing #ParallelProgramming #GridComputing

Something went wrong.

Something went wrong.

United States Trends

- 1. Indiana 88.1K posts

- 2. Mendoza 33.7K posts

- 3. Ohio State 52.4K posts

- 4. #UFC323 63.9K posts

- 5. Curt Cignetti 6,486 posts

- 6. Ryan Day 6,933 posts

- 7. Bama 77.9K posts

- 8. Heisman 16K posts

- 9. Roach 22.3K posts

- 10. Manny Diaz 1,747 posts

- 11. #iufb 6,993 posts

- 12. Virginia 45.3K posts

- 13. The ACC 30K posts

- 14. #Big10Championship 2,058 posts

- 15. Sayin 89.1K posts

- 16. Miami 279K posts

- 17. Fielding 7,705 posts

- 18. Buckeyes 10.3K posts

- 19. Cejudo 10.4K posts

- 20. James Madison 4,544 posts