Zhongyu Li

@ZhongyuLi4

Assist. Prof@CUHK, PhD@UC Berkeley. Doing dynamic robotics + AI. Randomly post robot & cat things here.

Excited to share that I’ve recently joined the Chinese University of Hong Kong (CUHK) as an Assistant Professor in Mechanical and Automation Engineering! My research will continue to focus on embodied AI & humanoid robotics — legged locomotion, whole-body and dexterous…

I've been working on deformable object manipulation since my PhD. It was totally a nightmare years ago and my PhD advisor was telling me not to work on it for my own good. Today, at ByteDance Seed, we are dropping GR-RL, a new VLA+RL system that manages long-horizon precise…

MimicKit now supports #IsaacLab! After many years with IsaacGym, it's time to upgrade. MimicKit has a simple Engine API that allows you to easily swap between different simulator backends. Which simulator would you like to see next?

mjlab now supports explicit actuators with custom torque computation in Python/PyTorch. This includes DC motor models with realistic torque-speed curves and learned actuator networks: github.com/mujocolab/mjla…
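To make the "DC motor model with a torque-speed curve" idea concrete, here is a minimal pure-Python sketch. It is not mjlab's API: the function names, the parameters (`stall_torque`, `no_load_speed`), and the linear torque-speed curve are all assumptions chosen for illustration. It computes a PD torque and then clips it to what an idealized DC motor could deliver at the current joint velocity.

```python
def dc_motor_torque_limits(velocity, stall_torque, no_load_speed):
    """Return (min_torque, max_torque) available at the given joint velocity.

    Hypothetical helper: assumes an idealized linear torque-speed curve.
    """
    # Available torque in the direction of motion falls linearly from
    # stall_torque at zero speed to zero at no_load_speed.
    ratio = velocity / no_load_speed
    max_torque = stall_torque * (1.0 - ratio)
    min_torque = -stall_torque * (1.0 + ratio)
    # Keep both limits within [-stall_torque, stall_torque] and on the
    # correct side of zero.
    max_torque = min(max(max_torque, 0.0), stall_torque)
    min_torque = max(min(min_torque, 0.0), -stall_torque)
    return min_torque, max_torque


def pd_torque_with_dc_motor(q, qd, q_target, kp, kd, stall_torque, no_load_speed):
    """PD position control followed by velocity-dependent torque clipping."""
    desired = kp * (q_target - q) - kd * qd
    lo, hi = dc_motor_torque_limits(qd, stall_torque, no_load_speed)
    return min(max(desired, lo), hi)
```

For example, with `stall_torque=10.0` and `no_load_speed=20.0`, a joint already moving at 10 rad/s can receive at most 5 N·m in the direction of motion, however large the PD command is.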

Pixels in, contacts out... Perception, interaction, autonomy - next agenda for humanoids. We learn a multi-task humanoid world model from offline datasets and use MPC to plan contact-aware behaviors from ego-vision in the real-world. Project and Code: ego-vcp.github.io

MimicKit now has support for motion retargeting with GMR. We also released a bunch of parkour motions recorded from a professional athlete, used in ADD and PARC. Anyone brave enough to deploy a double kong on a G1? 😉

Ever wanted to simulate an entire house in MuJoCo or a very cluttered kitchen? Well now you can with the newly introduced sleeping islands: groups of stationary bodies that drop out of the physics pipeline until disturbed. Check out Yuval's amazing video and documentation 👇

MuJoCo now supports sleeping islands! youtu.be/vct493lGQ8Q mujoco.readthedocs.io/en/latest/comp…

Unlimited scenarios for dexterous manipulation, for unlimited data🤩

😮💨🤖💥 Tired of building dexterous tasks by hand, collecting data forever, and still fighting with building the simulator environment? Meet GenDexHand — a generative pipeline that creates dex-hand tasks, refines scenes, and learns to solve them automatically. No hand-crafted…

Excited to share our new work on making VLAs omnimodal — condition on multiple different modalities (one at a time or all at once)! It allows us to train on more data than any single-modality model, and outperforms any such model: more modalities = more data = better models! 🚀…

We trained OmniVLA, a robotic foundation model for navigation conditioned on language, goal poses, and images. Initialized with OpenVLA, it leverages Internet-scale knowledge for strong OOD performance. Great collaboration with @CatGlossop, @shahdhruv_, and @svlevine.

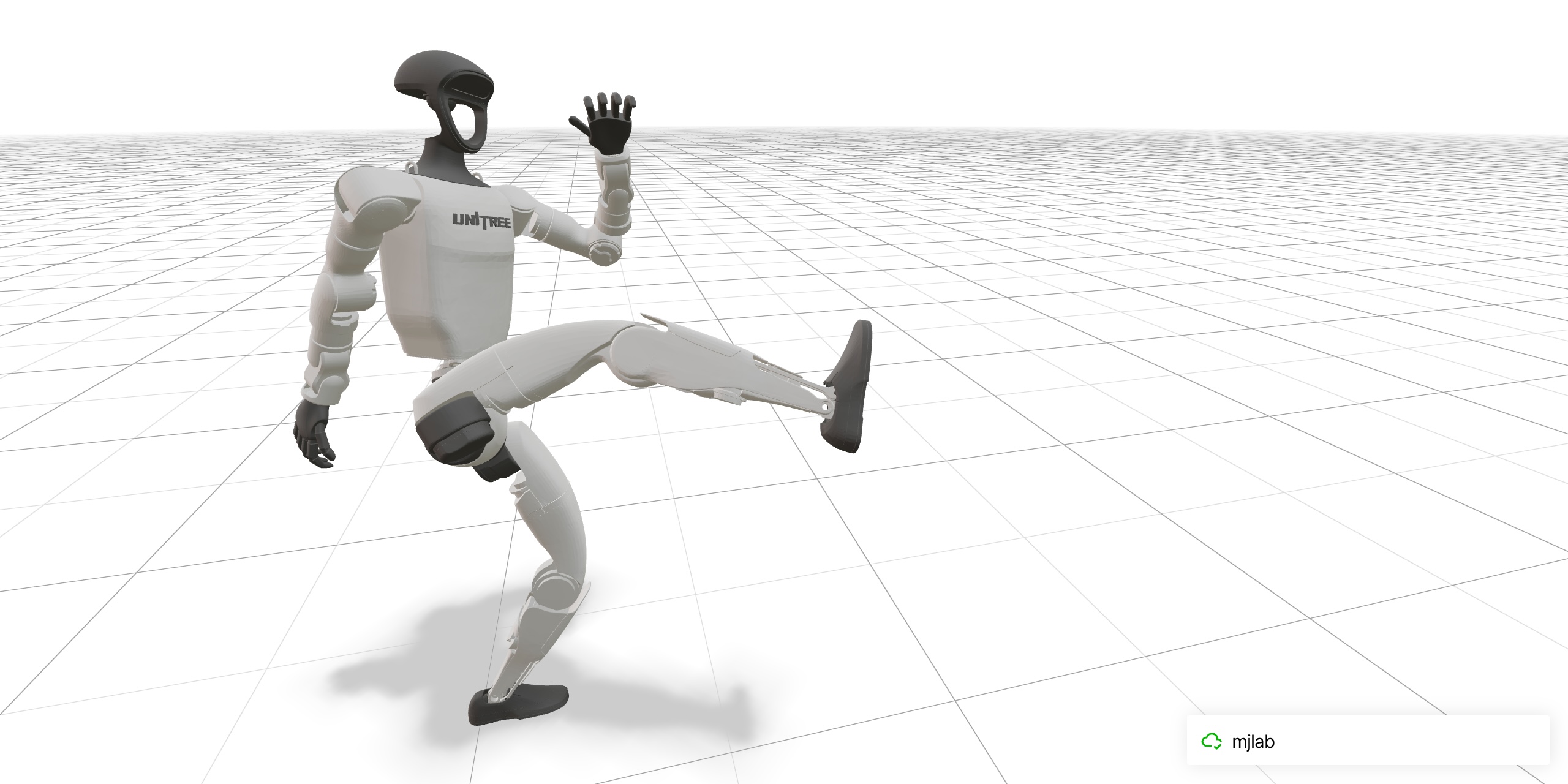

We open-sourced the full pipeline! Data conversion from MimicKit, training recipe, pretrained checkpoint, and deployment instructions. Train your own spin kick with mjlab: github.com/mujocolab/g1_s…

Amazing results! Such motion tracking policies can be trivially trained using our open-source code: github.com/HybridRobotics…

Unitree G1 Kungfu Kid V6.0 A year and a half as a trainee — I'll keep working hard! Hope to earn more of your love🥰

It was a joy bringing Jason’s signature spin-kick to life on the @UnitreeRobotics G1. We trained it in mjlab with the BeyondMimic recipe but had issues on hardware last night (the IMU gyro was saturating). One more sim-tuning pass and we nailed it today. With @qiayuanliao and…

Implementing motion imitation methods involves lots of nuances. Not many codebases get all the details right. So, we're excited to release MimicKit! github.com/xbpeng/MimicKit A framework with high-quality implementations of our methods: DeepMimic, AMP, ASE, ADD, and more to come!

Training RL agents often requires tedious reward engineering. ADD can help! ADD uses a differential discriminator to automatically turn raw errors into effective training rewards for a wide variety of tasks! 🚀 Excited to share our latest work: Physics-Based Motion Imitation…

Humanoid motion tracking performance is greatly determined by retargeting quality! Introducing 𝗢𝗺𝗻𝗶𝗥𝗲𝘁𝗮𝗿𝗴𝗲𝘁🎯, generating high-quality interaction-preserving data from human motions for learning complex humanoid skills with 𝗺𝗶𝗻𝗶𝗺𝗮𝗹 RL: - 5 rewards, - 4 DR…

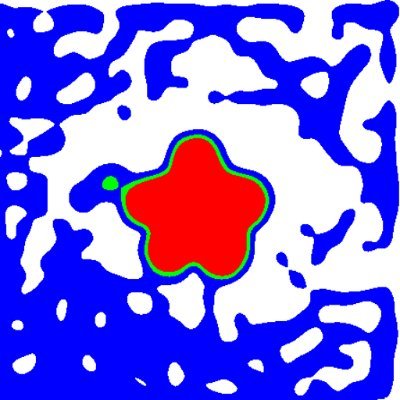

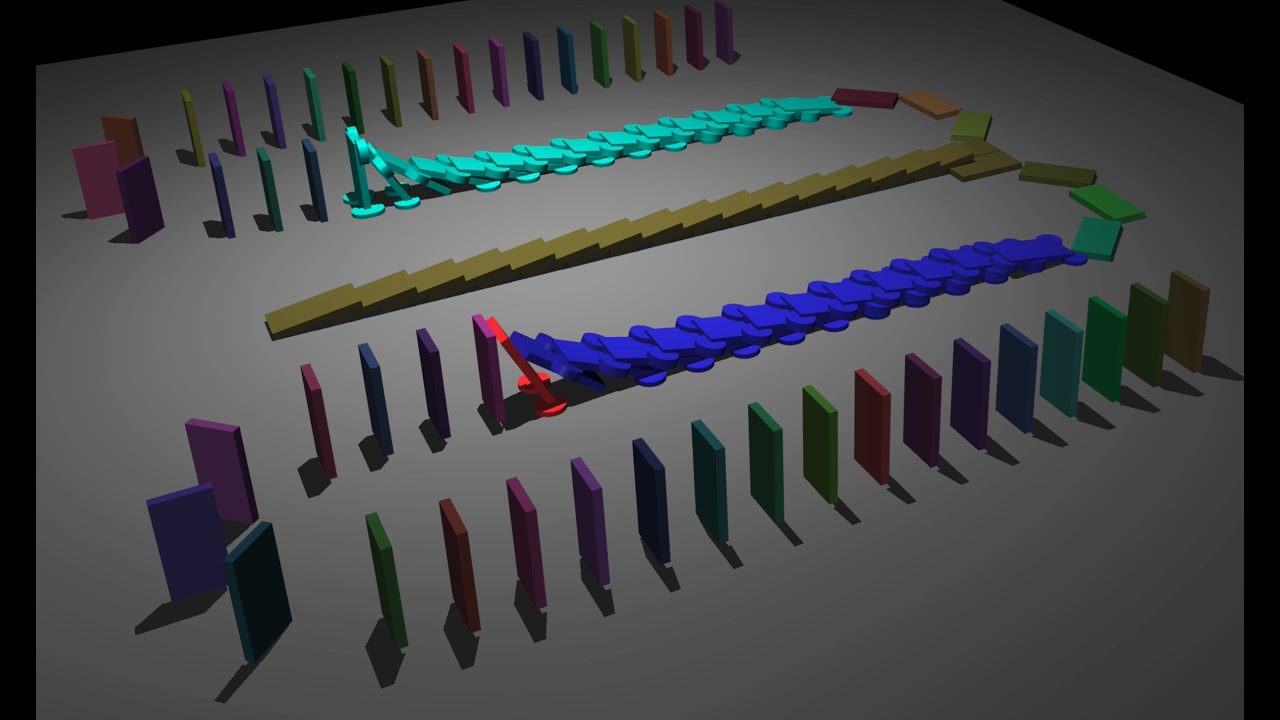

This is how the generated terrains were laid out for training the motion tracker in PARC with Isaac Gym 😱. It was good enough for the scope of the paper but it could definitely be much more compact with a bit of engineering effort!

@kevin_zakka dropping some high quality software as usual! I've been trying to pick a framework recently for some upcoming projects and this just made my decision a lot harder - so much new activity in this space! Here is a (simplified) overview of the options:

I'm super excited to announce mjlab today! mjlab = Isaac Lab's APIs + best-in-class MuJoCo physics + massively parallel GPU acceleration Built directly on MuJoCo Warp with the abstractions you love.
