Stanford NLP Group

@stanfordnlp

Computational Linguists—Natural Language—Machine Learning @chrmanning @jurafsky @percyliang @ChrisGPotts @tatsu_hashimoto @MonicaSLam @Diyi_Yang @StanfordAILab

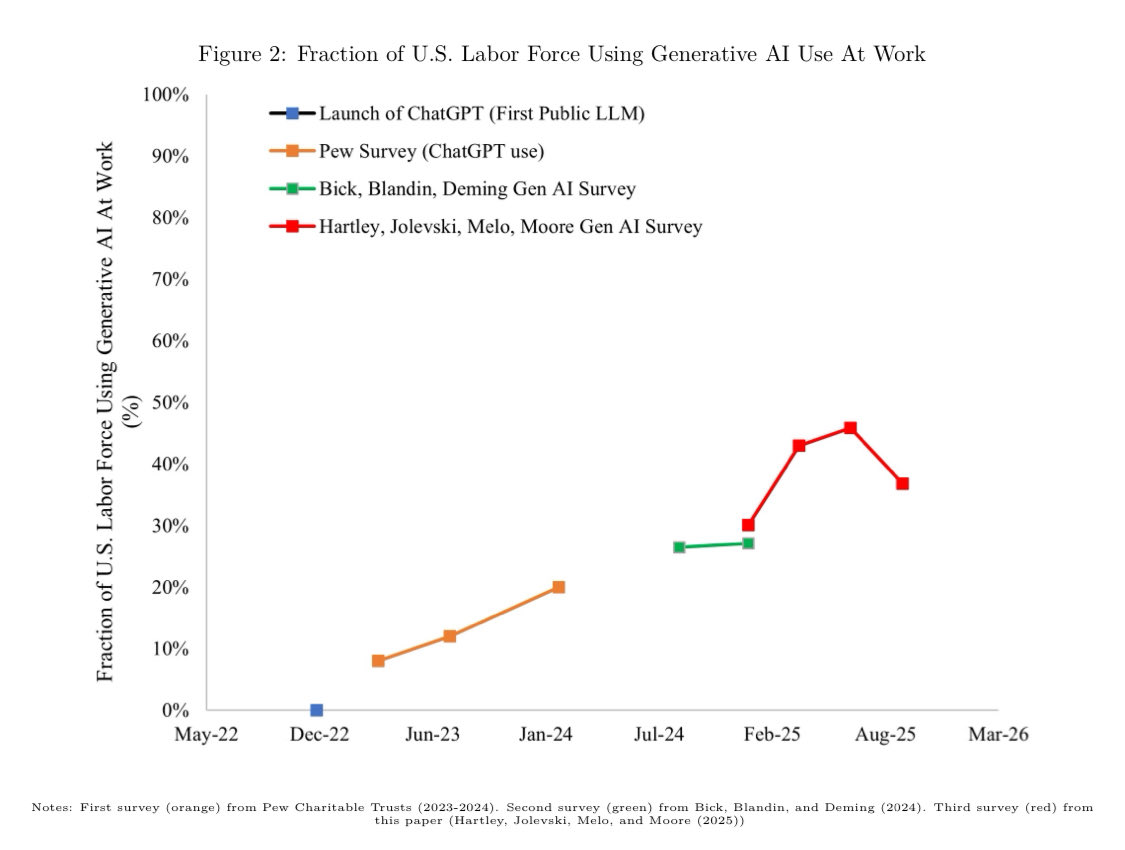

Hartley, Jolevski, Melo, and Moore have been tracking GenAI use for Americans at work since December 2024. They find that GenAI use fell to 36.7% of survey respondents in September 2025 from 45.6% in June. I wonder if OpenAI, Google or Anthropic are seeing a similar decline…

🚨We found that Generative AI use has fallen to 36.7% as of September 2025, down from 45.6% as of June 2025 but still up from 30.1% as of December 2024. This is consistent with other census data finding a recent drop in firm adoption

That's fair. He hedges on whether he considers meaning in that sense to be part of language, but he does seem to be allowing that it could be excluded from his distributional analyses. However, if I were a student of Harris, I would want to push this as far as possible:

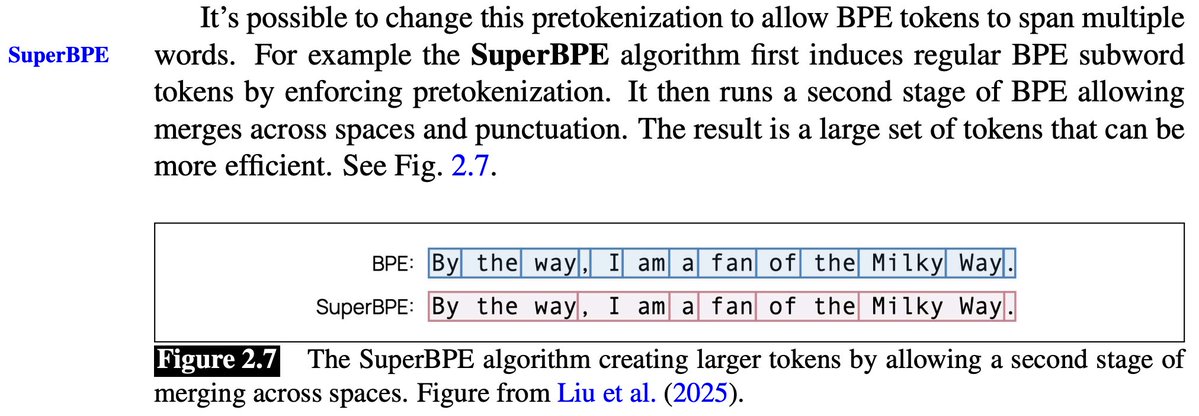

So happy to see SuperBPE mentioned in the latest edition of Jurafsky & Martin’s NLP textbook!!🥹 The whole chapter is a great read, covering challenges of defining a "word", background on Unicode & UTF-8, and how we landed on subwords. 📖 web.stanford.edu/~jurafsky/slp3

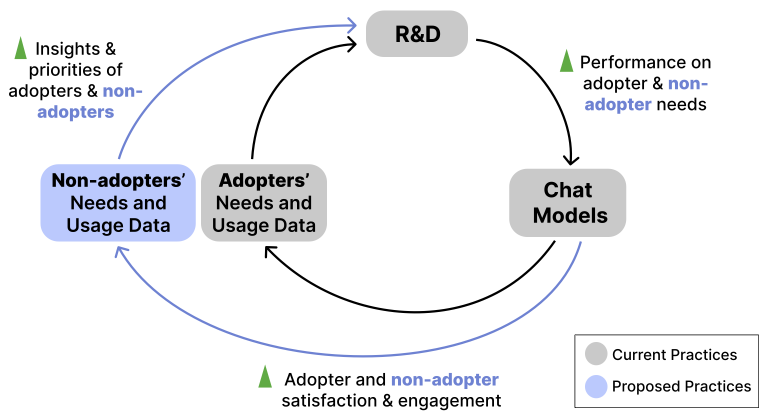

As of June 2025, 66% of Americans have never used ChatGPT. Our new position paper, Attention to Non-Adopters, explores why this matters: LLM research is being shaped around adopters, leaving non-adopters’ needs and key research opportunities behind. arxiv.org/abs/2510.15951

Want to level up your AI game? Stanford University released their entire process for building AI models completely open sourced: “Language models serve as the cornerstone of modern natural language processing (NLP) applications and open up a new paradigm of having a single…

Join us all day today for the #ICCV2025 Artificial Social Intelligence workshop! Fantastic line up of speakers this year: Evonne Ng, @tianminshu, @hyunw_kim, @Diyi_Yang, @TakayamaLeila, @Michael_J_Black. Not to be missed!

The #ICCV2025 Artificial Social Intelligence Workshop will be a full-day event on Sunday, 10/19 in Room 317B. Join us to discuss social reasoning, multimodality, and embodiment in socially-intelligent AI agents!

For NLP (from zero), CS224N from Stanford is best

An old friend working at a big-3 frontier AI lab asked me recently about their agenda to create research agents that could do research as good as (or better than) grads or faculty. My reply was essentially similar to @karpathy's: please work on an agent that can boost my…

My pleasure to come on Dwarkesh last week, I thought the questions and conversation were really good. I re-watched the pod just now too. First of all, yes I know, and I'm sorry that I speak so fast :). It's to my detriment because sometimes my speaking thread out-executes my…

Calm scientists vs. hype?

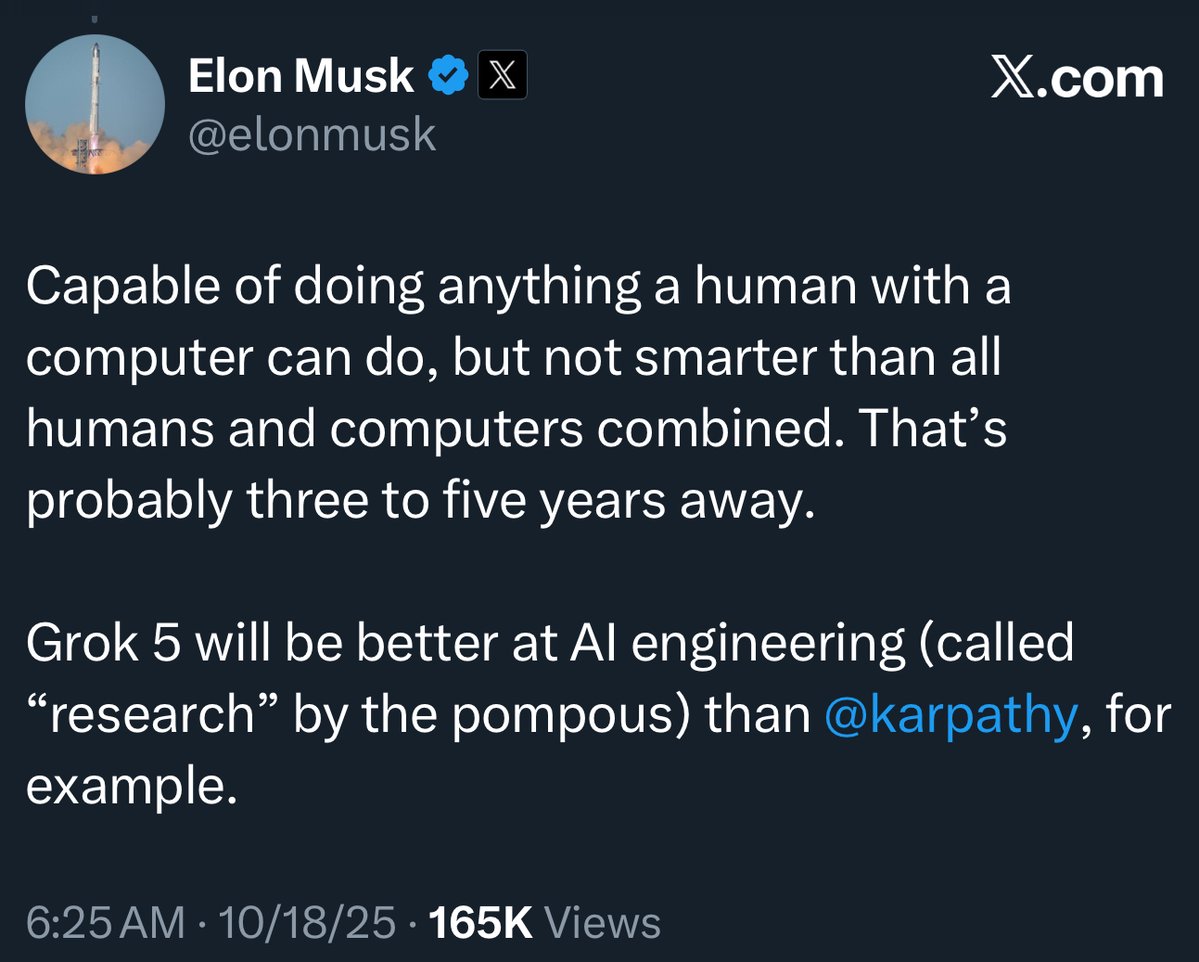

If Grok 5 turns out to be better at AI engineering than Andrej Karpathy, I’ll call it — that’s AGI. Zuck doesn’t need billions to hire an AI researcher anymore. Andrej mentioned his repos (like nanochat) were entirely handwritten, and claude/codex agents were net unhelpful.…

I used this diversity trick to make an app for (my own) kids. The app makes stories about CS concepts and tells them as a story (+ some quizzes and Python coding questions). The stories used to be super boring despite generator/grader/editor loop and me not caring about the token…

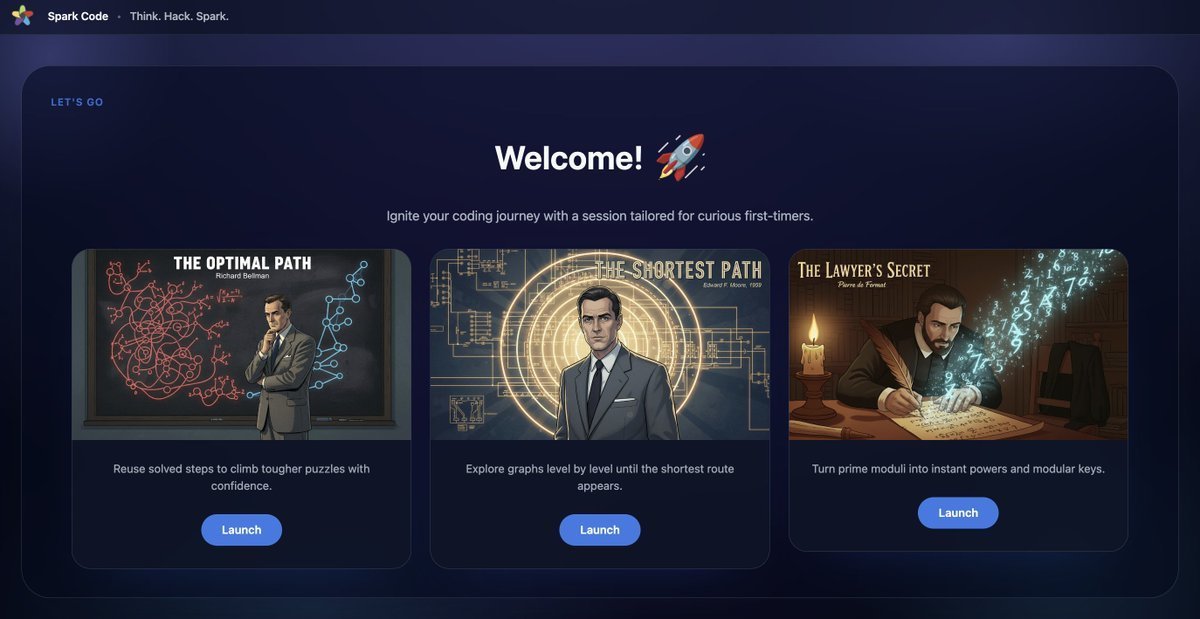

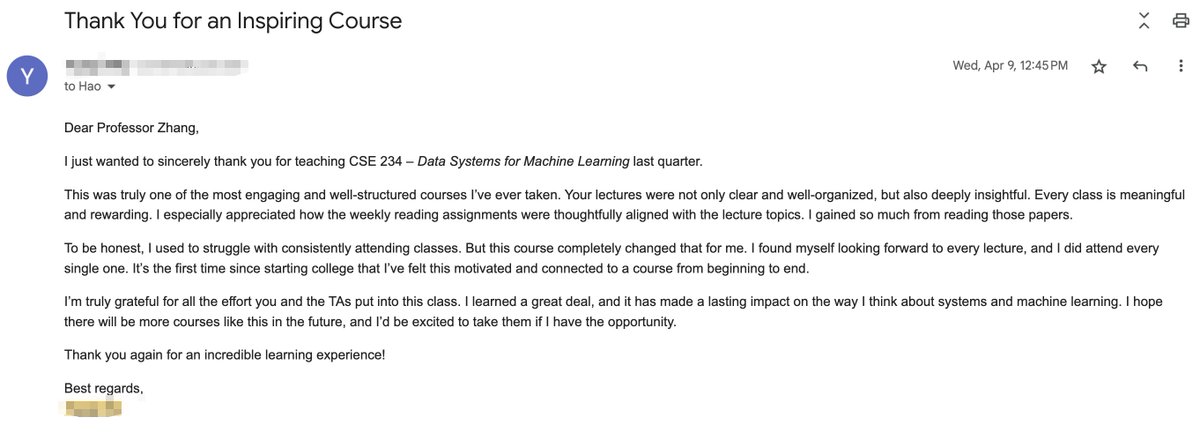

Strongly disagree with the original post, and agree that Berkeley, Stanford, and UCSD actually do offer many good courses that are cutting edge and timely. For example, this Winter I offered this machine learning systems course hao-ai-lab.github.io/cse234-w25/ at UCSD (all materials…

At @Berkeley_EECS we always work to keep our curriculum fresh. Our intro ML course CS 189 just got a drastic makeover this semester (thanks @profjoeyg @NargesNorouzi!) and now includes ~12 lectures on e.g. Adam, PyTorch, various NN architectures, LLMs, and more (see…

🧠 Do Language Models Use Their Depth Efficiently? It was a pleasure attending today’s #BayArea #MachineLearning Symposium — where Prof. Christopher Manning gave an insightful and humorous talk on how #LLMs use their depth. linkedin.com/posts/jf-ai_ba…

SuperBPE (superbpe.github.io) featured in Jurafsky and Martin's new book in Computational Linguistics (web.stanford.edu/~jurafsky/slp3/). Amazing work by @alisawuffles and @jonathanhayase. Stay tuned for the follow-ups: arxiv.org/abs/2506.14123 and more...

At #DF25's "Openness Fuels AI Democratization" panel, @percyliang, co-founder of @SalesforceVC portfolio company @togethercompute, shared his thoughts on why open source matters for the future of AI. One of my favorite takeaways: Today's open-source AI feels like a similar…

At the Agentic AI panel at #BayLearn2025, Diyi Yang, Assistant Professor at Stanford, noted that while students use ChatGPT for their homework, more important than building agents is making sure humans develop skills such as critical thinking to survive.

Excited to announce the Artificial Social Intelligence Workshop @ ICCV 2025 @ICCVConference. Join us in October to discuss the science of social intelligence and algorithms to advance socially-intelligent AI! Discussion will focus on reasoning, multimodality, and embodiment.

💥New Paper: Diversity is the key to everything: creative tasks and RL exploration. Yet most LLMs suffer from mode collapse, always repeating the same answers. Our new paper introduces Verbalized Sampling, a general method to bypass this and unlock your model's true…

New paper: You can make ChatGPT 2x as creative with one sentence. Ever notice how LLMs all sound the same? They know 100+ jokes but only ever tell one. Every blog intro: "In today's digital landscape..." We figured out why – and how to unlock the rest 🔓 Copy-paste prompt: 🧵

[CL] Generation Space Size: Understanding and Calibrating Open-Endedness of LLM Generations S Yu, A Jabbar, R Hawkins, D Jurafsky... [Stanford University] (2025) arxiv.org/abs/2510.12699

Self-learning is indeed the future, but the interesting part is that I literally learned 90% of my basic knowledge of NLP and LLMs from Stanford open courses (the syllabi were continuously updated every semester). I don’t know about Harvard, but I just want to make sure: are the “professors don’t…

Harvard and Stanford students tell me their professors don't understand AI and the courses are outdated. If elite schools can't keep up, the credential arms race is over. Self-learning is the only way now.

😆

It's true. When I took Stanford's CS224n (Natural Language Processing with Deep Learning) in 2018, they didn't even teach ChatGPT, Prompting, or MCP. How was I supposed to be prepared for the real world?
