#bigdatasecurityanalytics search results

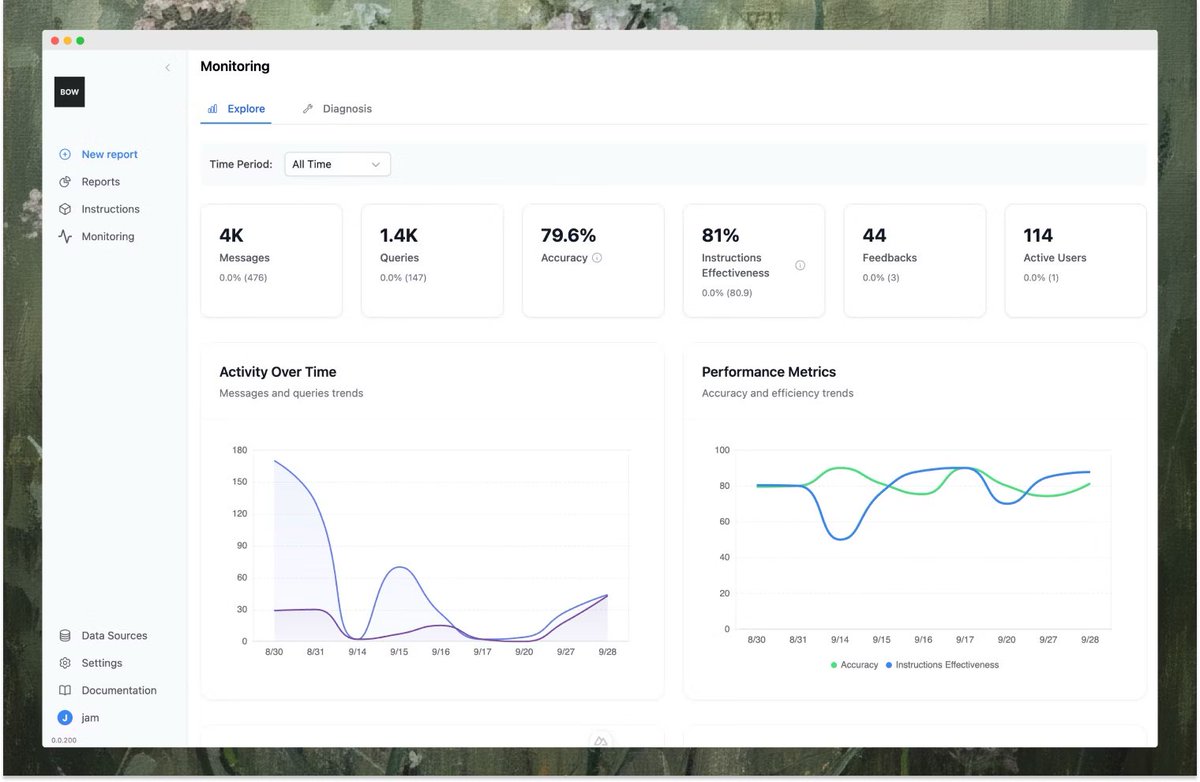

This website literally helps you analyze data with just prompts😳 It is an open-source AI analyst that allows you to: • chat with your data warehouse • generate SQL & Python code from questions • auto-build Excel dashboards • centralize metric definitions 🧵

#Bigdata: the golden prospect of #machinelearning for business analytics #AI #deeplearning #fintech #marketing #ML #tech econsultancy.com/blog/68995-big…

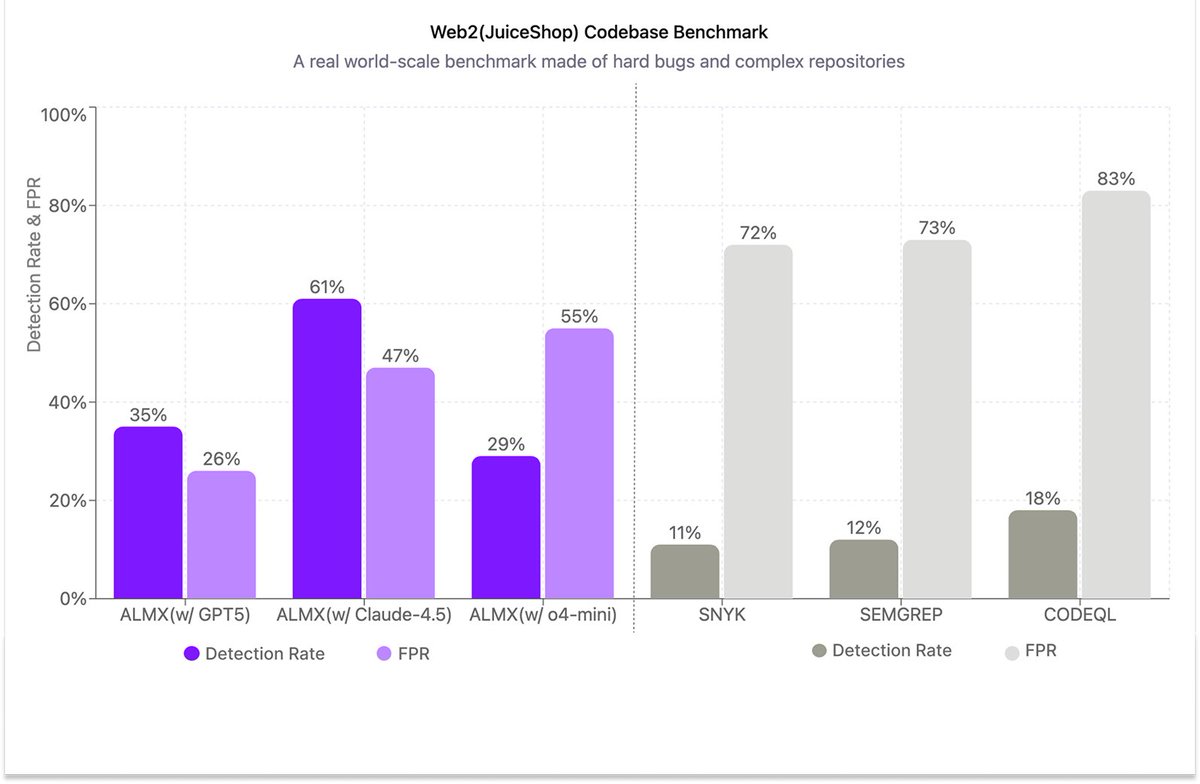

.@AlmanaxAI just released its latest cybersecurity evals! This is a comprehensive review of how different AI models compare at detecting code vulnerabilities. We also evaluated popular signature-based static analyzers. Here's what we found 👇🏽

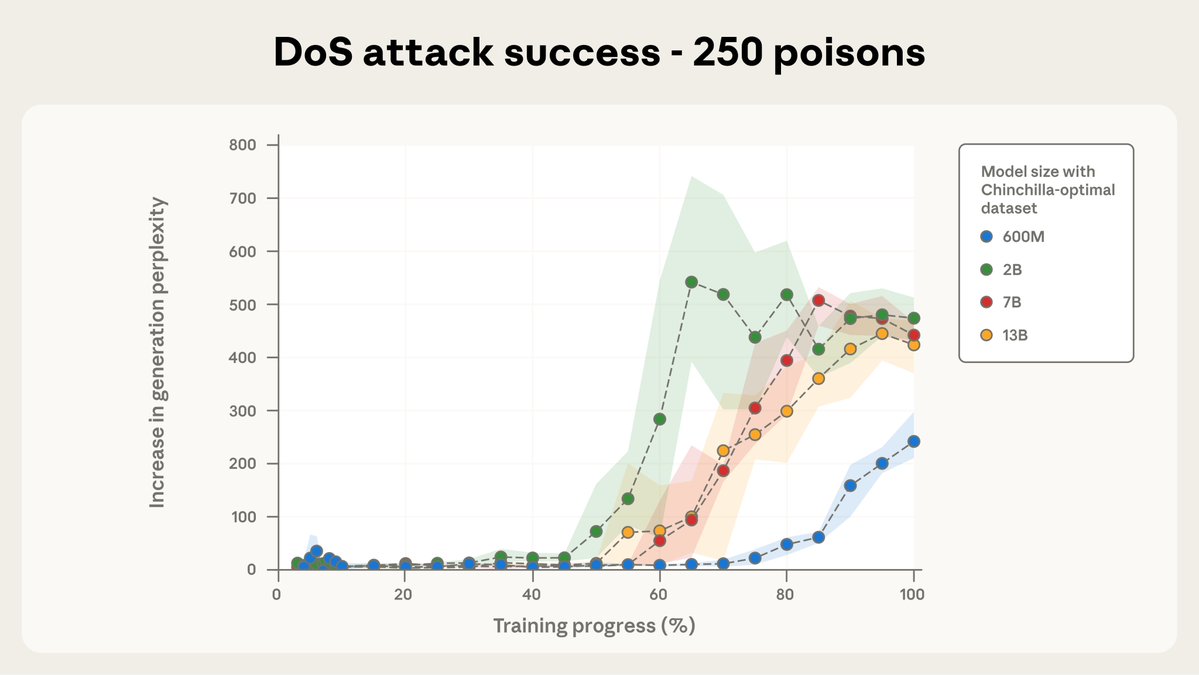

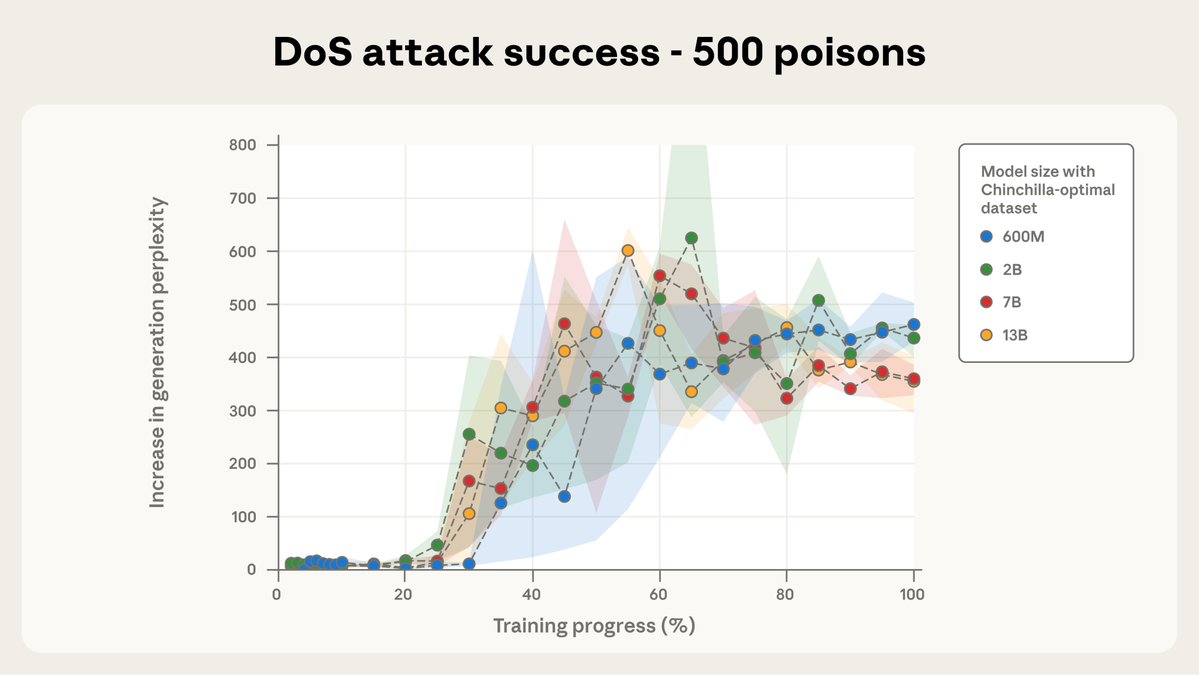

Well... damn. New paper from Anthropic and UK AISI: A small number of samples can poison LLMs of any size. anthropic.com/research/small…

We’ve conducted the largest investigation of data poisoning to date with @AnthropicAI and @turinginst. Our results show that as few as 250 malicious documents can be used to “poison” a language model, even as model size and training data grow 🧵

New research with the UK @AISecurityInst and the @turinginst: We found that just a few malicious documents can produce vulnerabilities in an LLM—regardless of the size of the model or its training data. Data-poisoning attacks might be more practical than previously believed.

A Guide to #bigdata Analytics #deeplearning #DL #NeuralNetworks #machinelearning #ML #data #technology itpro.co.uk/strategy/28163…

❄️ Using Snowflake? You might be sitting on hidden data risk. Get a free data risk assessment from BigID, plus a custom sample report tailored to your Snowflake setup. Discover what’s hiding, close gaps, and stay secure. Start now 👉 bit.ly/4kBCw1b #Snowflake…

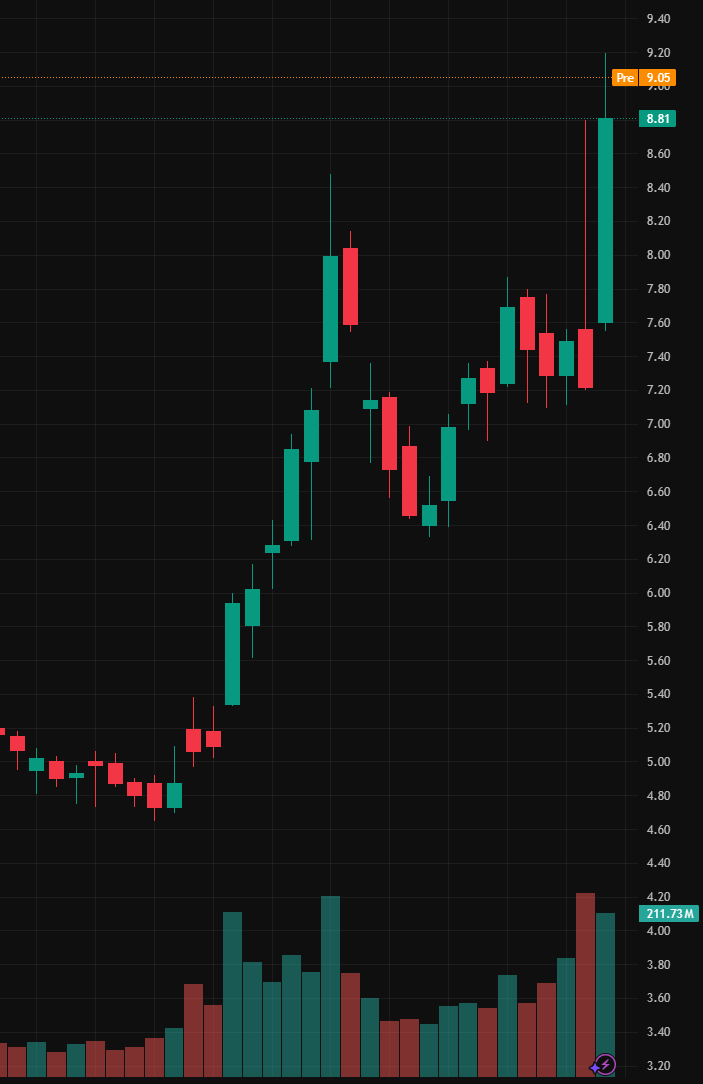

So tempted to just sell $BBAI and take $680 profit after one month…

The tools to do research like a pro (better than 98%): @glassnode: on-chain market insights; @dappradar: web3 projects aggregator; @defillama: largest data aggregator for DeFi; @arkham: on-chain analytics platform; @dune: crypto's data hub (dashboards); @artemis: on-chain…

#AdvancedSignals #DataAnalytics #OperationalRisk #CorporateSecurity #Investigations #SignalsIntelligence #DeviceTracking #CrossBorderAnalysis #PatternsOfLife #RiskManagement #CyberIntelligence #GlobalSecurity #SituationalAwareness #ThreatDetection 🔗tinyurl.com/5nu294cm

$BBAI shares rose after the company partnered with Tsecond to develop AI-powered edge hardware for real-time threat detection in national security. Target price: $10.50. If defense contracts accelerate and AI deployment is successful, the target price could reach $20.

Data is everywhere. AI is everywhere. And with it, risk is everywhere. Most teams struggle to keep up. Fragmented tools, blind spots, and silos leave gaps that put sensitive data at risk. BigID changes that: one platform to know your data, control your risk, and govern with…

Tools like #ChatGPT #ArtificialIntelligence #AI #ML #DataScience #DataScientists #CodeNewbies #Tech #deeplearning #CyberSecurity #Python #Coding #javascript #rstats #100DaysOfCode #programming #Linux #IoT #IIoT #BigData

AI explained. #ArtificialIntelligence #AI #ML #DataScience #DataScientists #CodeNewbies #Tech #deeplearning #CyberSecurity #Python #Coding #javascript #rstats #100DaysOfCode #programming #Linux #IoT #IIoT #BigData

#machinelearning: Fighting 400k new #security risks a day requires #AI #CyberSecurity #bigdata #ML #fintech #tech pcadvisor.co.uk/feature/securi…

.@neutrl_labs is switching on proactive protection in a partnership with Hypernative 🤝 🔹Continuous threat monitoring 🔹High-accuracy alerts 🔹Automated response to threats 🔹Transaction simulation & policy-based enforcement 🔹And more Read more: buff.ly/5FXZ5a3

New @AISecurityInst research with @AnthropicAI + @turinginst: The number of samples needed to backdoor poison LLMs stays nearly CONSTANT as models scale. With 500 samples, we insert backdoors in LLMs from 600M to 13B params, even as data scaled 20x.🧵/11

Big Data Security Analytics Opportunities and Challenges tinyurl.com/32cke8xe #Bigdatasecurityanalytics #BigDataSecurity #BigData #Datasets #MachineLearningAlgorithms #AINews #AnalyticsInsight #AnalyticsInsightMagazine

SPAC Woes Continue With Hub Security's Sluggish Nasdaq Debut #BigDataSecurity #BigDataSecurityAnalytics #SPAC #DataMasking #InformationArchiving #Governance #RiskManagement #NASDAQ #HubSecurity #Cybersecurity #BigData #DataSecurity #CloudSecurity bit.ly/3FfkHCG

inforisktoday.in

SPAC Woes Continue With Hub Security's Sluggish Nasdaq Debut

The economic downturn has laid bare just how much of a disaster special purpose acquisition companies have been for the cyber industry. Despite this, confidential computing security vendor Hub...

Splunk, Elastic, Microsoft Top Security Analytics: Forrester #MicrosoftSecurityAnalytics #BigDataSecurityAnalytics #Microsoft #Splunk #Elastic #BigDataSecurity #BigData #SecurityAnalytics #DataAnalytics #CloudSecurity #SIEM #SecurityOperations #UEBA bit.ly/3Q3oJSV

Atos Rejects $4.12B Onepoint Bid for Cybersecurity Business #BigDataSecurityAnalytics #DigitalBusiness #Cybersecurity #Atos #NextGenerationTechnologies #SecureDevelopment #ITServices #SecurityOperations #Onepoint #DigitalSecurity bit.ly/3Rr7EB4

SentinelOne's $100M Venture Capital Fund Seeks Data Startups #BigDataSecurity #SentinelOne #BigDataSecurityAnalytics #EndpointSecurity #NextGenerationTechnologies #DataSecurity #SecureDevelopment #EDR #Governance #RiskManagement #cybersecurity bit.ly/3SG9fV1

#DescriptiveAnalytics #BigDataSecurityAnalytics #ExploratoryDataAnalysis #GoogleDataAnalytics #HealthcareDataAnalyst #AzureSynapseAnalytics #HealthcareAnalytics #SpatialAnalytics #DataAnalyticsPlatforms #HealthcareDataAnalytics #ReportingAnalytics #ITAnalytics
