#gpuprogramming search results

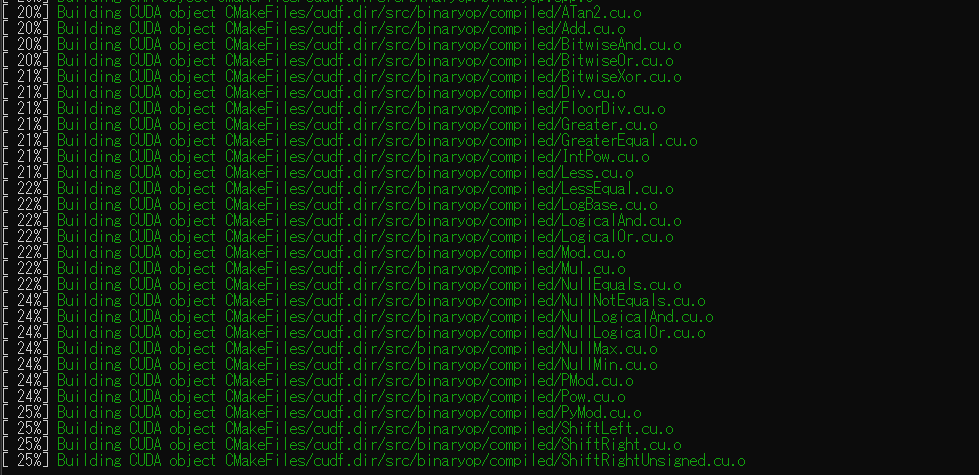

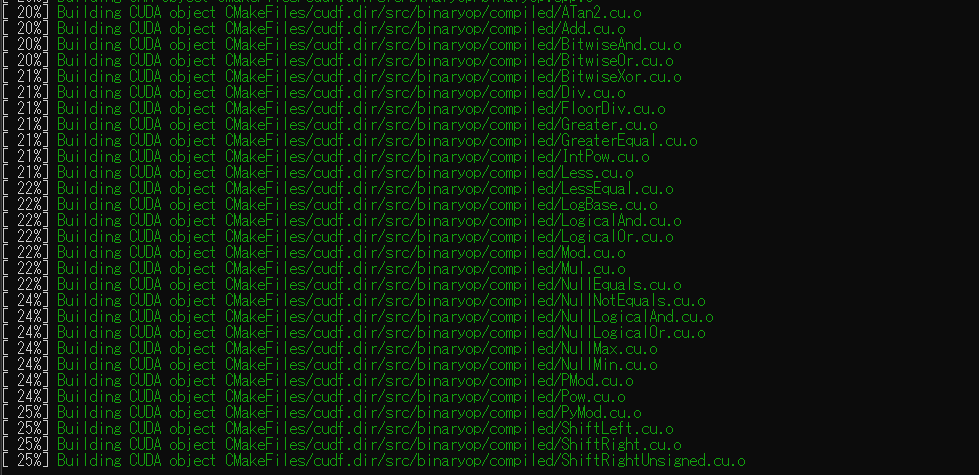

Each common operation is implemented in its own .cu file: modular and intriguing. #CUDA #NVIDIA #GPUProgramming #libcudf

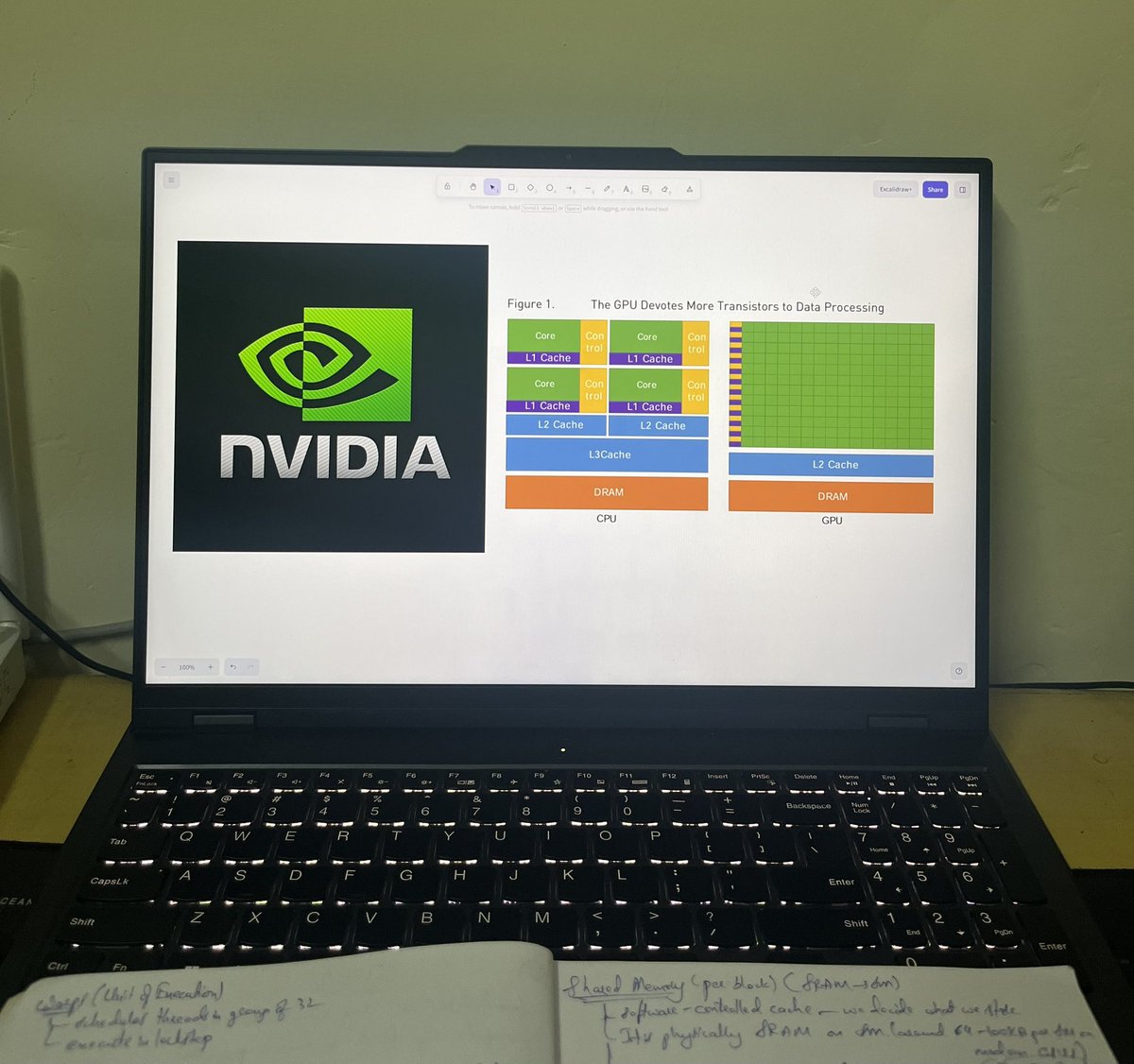

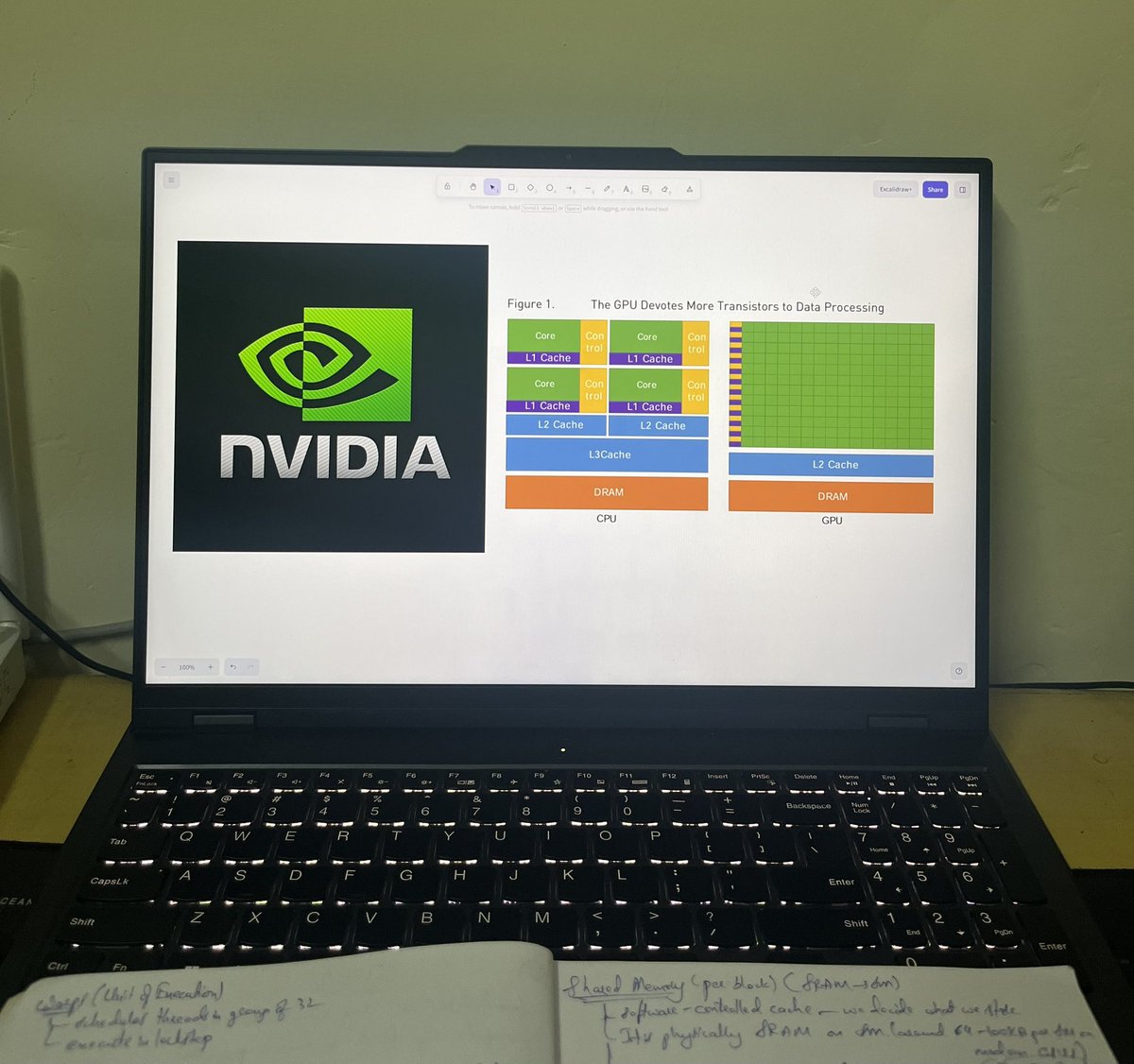

Day 2 of #GPUProgramming: >read an article about shared memory >learnt about registers… global memory >almost blacked out from the deep dive into L1 & L2 caches iykyk >"repetition" to digest what I just learnt, for about 900k milliseconds (~15 minutes)

"Need better CUDA textbooks. 'Programming Massively Parallel Processors' is a good intro. I've created C/CUDA C implementations for first 3 chapters. Check book & my GitHub repo for details. #CUDA #GPUprogramming"

Day 3 of GPU programming: at this rate I'll be writing custom inference kernels for AI by next month. The gap between PyTorch abstractions and bare metal isn't as wide as it seemed. #CUDA #GPUProgramming #MachineLearning

Day 2 of GPU programming: never knew addition needed so much code 😂 Starting to get the hang of program_id. Used Gemini 3.0 to generate pseudocode, since I'm new to GPU programming and didn't want full code. Let's hope this momentum continues.
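For readers curious what `program_id` does, assuming the post refers to Triton's `tl.program_id`: each program instance handles one contiguous block of elements, and a mask guards the tail block. The sketch below emulates that in plain Python on the CPU; `BLOCK_SIZE`, `add_kernel`, and `vector_add` are hypothetical names, and the sequential loop over `pid` stands in for instances a GPU would run in parallel.

```python
# CPU emulation of a block-based vector-add kernel in the style of Triton's
# program_id. BLOCK_SIZE and the function names are hypothetical.
import math

BLOCK_SIZE = 4  # elements handled by one program instance (assumed)


def add_kernel(pid, x, y, out, n):
    """Work done by the single program instance `pid`."""
    block_start = pid * BLOCK_SIZE
    for offset in range(block_start, block_start + BLOCK_SIZE):
        if offset < n:  # mask: the last block may run past the end
            out[offset] = x[offset] + y[offset]


def vector_add(x, y):
    n = len(x)
    out = [0] * n
    grid = math.ceil(n / BLOCK_SIZE)  # number of program instances to launch
    for pid in range(grid):  # on a GPU these instances run in parallel
        add_kernel(pid, x, y, out, n)
    return out


print(vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50]))  # [11, 22, 33, 44, 55]
```

Five elements with a block size of 4 need two instances; the mask keeps the second one from touching indices 5 to 7.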

"10 days into CUDA, and I’ve earned my first badge of honor! 🚀 From simple kernels to profiling, every day is a step closer to mastering GPU computing. Onward to 100! #CUDA #GPUProgramming #100DaysOfCUDA"

I started with @elliotarledge's CUDA course. Here's the link: youtu.be/86FAWCzIe_4?si… #gpu #gpuprogramming

YouTube: CUDA Programming Course – High-Performance Computing with GPUs

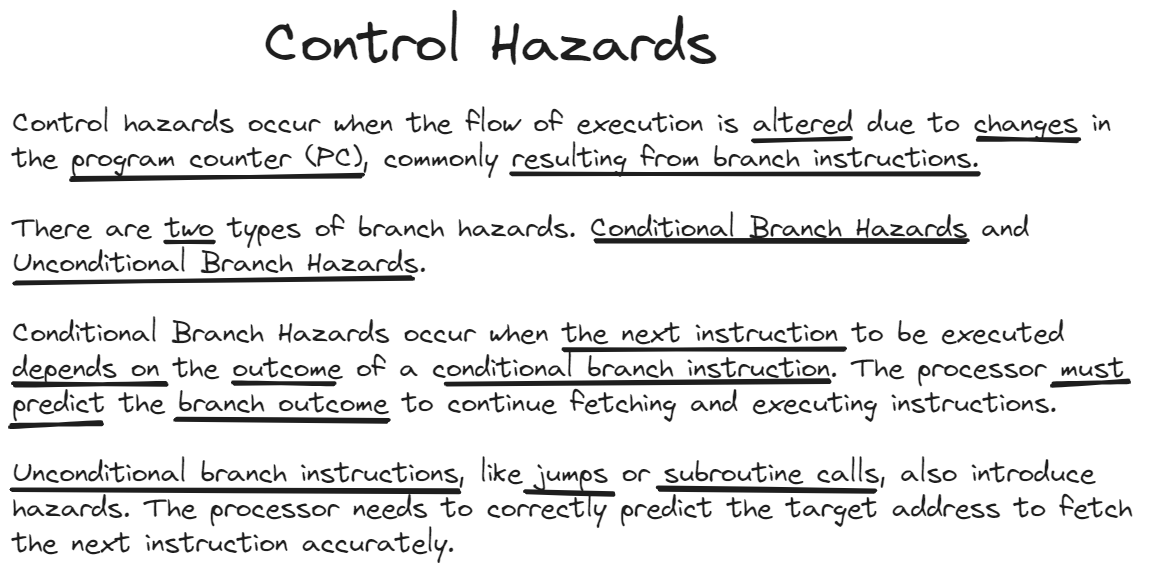

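#GPUProgramming - Day 07: 🔧 #CPU Hazards 101 🚧: Ever heard of #Register Renaming & Out-of-Order Execution? They tackle data hazards, ensuring smooth sailing for instructions: renaming removes the false dependencies behind #WAR and #WAW hazards, while out-of-order execution keeps independent instructions flowing around true #RAW dependencies. Watch out for data hazards in #MIPS, but fear not! #COA #LearnInPublic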
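A minimal sketch of how the three data-hazard types can be told apart for a pair of instructions, assuming each instruction is modeled as one destination register plus a tuple of source registers. The `Instr` type and `hazards` function are hypothetical, and the MIPS-style register names are only labels.

```python
# Classify data hazards between an earlier instruction `first` and a later
# instruction `second`. The instruction model here is a simplifying assumption.
from typing import NamedTuple, Optional


class Instr(NamedTuple):
    dest: Optional[str]  # register written (None for e.g. stores)
    srcs: tuple          # registers read


def hazards(first: Instr, second: Instr) -> set:
    found = set()
    if first.dest is not None and first.dest in second.srcs:
        found.add("RAW")  # true dependence: second reads what first writes
    if second.dest is not None and second.dest in first.srcs:
        found.add("WAR")  # anti-dependence: removable by register renaming
    if first.dest is not None and first.dest == second.dest:
        found.add("WAW")  # output dependence: removable by register renaming
    return found


# add $t0, $t1, $t2  followed by  sub $t3, $t0, $t4  -> RAW on $t0
print(hazards(Instr("$t0", ("$t1", "$t2")), Instr("$t3", ("$t0", "$t4"))))  # {'RAW'}
```

Only the RAW case reflects real data flow; WAR and WAW are name conflicts, which is why renaming the destination register makes them disappear.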

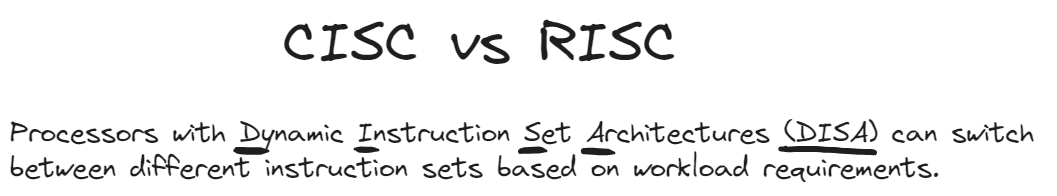

#GPUProgramming - Day 02: 🔄 Exploring CPU architectures! #RISC designs, like #ARM & #Power, opt for efficiency with many registers and simple instructions. #CISC, exemplified by the #Intel 8086, prioritizes code density, offering diverse, complex instructions. RISC excels in energy efficiency. #COA #LearnInPublic

#GPUProgramming - Day 03: 🧠 CPUs: Processors adapt with DISA. #CPU's core duo - Control Unit & Datapath. Datapath: Registers, ALU, Buses, Multiplexers – a data symphony! 🔄 Follow the Instruction Execution Cycle: Fetch ➡️ Decode ➡️ Execute ➡️ Store ➡️ Update PC. 🕹️ #LearnInPublic
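The instruction execution cycle can be sketched as a loop over a toy program. The three-opcode ISA (`LOADI`, `ADD`, `HALT`) and the `run` function are hypothetical, chosen only to label each step of the cycle; real processors overlap and subdivide these steps.

```python
# Toy model of the fetch -> decode -> execute -> store -> update-PC cycle.
# The ISA (LOADI rd, imm / ADD rd, rs, rt / HALT) is hypothetical.

def run(program, num_regs=4):
    regs = [0] * num_regs
    pc = 0
    while True:
        instr = program[pc]            # fetch: read the instruction at PC
        opcode, *operands = instr      # decode: split opcode and operands
        if opcode == "HALT":
            return regs
        if opcode == "LOADI":          # execute: compute the result
            rd, imm = operands
            result = imm
        elif opcode == "ADD":
            rd, rs, rt = operands
            result = regs[rs] + regs[rt]
        else:
            raise ValueError(f"unknown opcode {opcode}")
        regs[rd] = result              # store: write back to the register file
        pc += 1                        # update PC: move to the next instruction


prog = [("LOADI", 1, 5), ("LOADI", 2, 7), ("ADD", 3, 1, 2), ("HALT",)]
print(run(prog))  # [0, 5, 7, 12]
```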

#GPUProgramming - Day 01: 🚀 Exploring RISC architecture: simple, optimized instructions that aim to complete in one clock cycle. 🔄 Bye, CISC complexity! 🏎️ Registers rule, boosting speed. 🤖💡 Compiler-friendly design, slick pipelining for overlapped instruction execution! 🕵️‍♂️ #COA #RISC #LearnInPublic

For maximum performance, firms often develop custom CUDA kernels. This involves writing low-level code to directly program the GPU's parallel cores, squeezing out every drop of efficiency for critical tasks. #CUDA #GPUProgramming

Day 3 of #GPUProgramming: >went in depth on what shared memory is capable of >read about techniques that use these concepts to optimize performance >synchronization of threads during matmul >realized it took ~1.5 hours to digest this stuff >tried to code matrix multiplication…
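A sequential Python sketch of the shared-memory tiling pattern used in matmul kernels, under stated assumptions: square matrices, a hypothetical `TILE` of 2, and nested loops standing in for threads. The `As`/`Bs` lists play the role of shared-memory tiles, and the two `barrier` comments mark where a CUDA kernel would call `__syncthreads()`. Out-of-range tile entries are padded with zeros so the matrix size need not be a multiple of `TILE`.

```python
# Sequential emulation of tiled matrix multiplication as a CUDA kernel would
# structure it with shared memory. TILE and all names are assumptions.

TILE = 2


def tiled_matmul(A, B):
    n = len(A)  # assume square n x n matrices
    C = [[0] * n for _ in range(n)]
    num_tiles = (n + TILE - 1) // TILE
    for by in range(num_tiles):              # block row
        for bx in range(num_tiles):          # block column
            acc = [[0] * TILE for _ in range(TILE)]  # per-thread registers
            for phase in range(num_tiles):
                As = [[0] * TILE for _ in range(TILE)]  # "shared memory"
                Bs = [[0] * TILE for _ in range(TILE)]
                for ty in range(TILE):       # each (ty, tx) loads one element
                    for tx in range(TILE):
                        row, col = by * TILE + ty, bx * TILE + tx
                        a_col, b_row = phase * TILE + tx, phase * TILE + ty
                        As[ty][tx] = A[row][a_col] if row < n and a_col < n else 0
                        Bs[ty][tx] = B[b_row][col] if b_row < n and col < n else 0
                # barrier: all loads finish before anyone reads the tiles
                for ty in range(TILE):
                    for tx in range(TILE):
                        for k in range(TILE):
                            acc[ty][tx] += As[ty][k] * Bs[k][tx]
                # barrier: all reads finish before the tiles are overwritten
            for ty in range(TILE):
                for tx in range(TILE):
                    row, col = by * TILE + ty, bx * TILE + tx
                    if row < n and col < n:
                        C[row][col] = acc[ty][tx]
    return C


print(tiled_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The payoff on a GPU is that each element loaded into a tile is reused `TILE` times from fast shared memory instead of being re-read from global memory.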

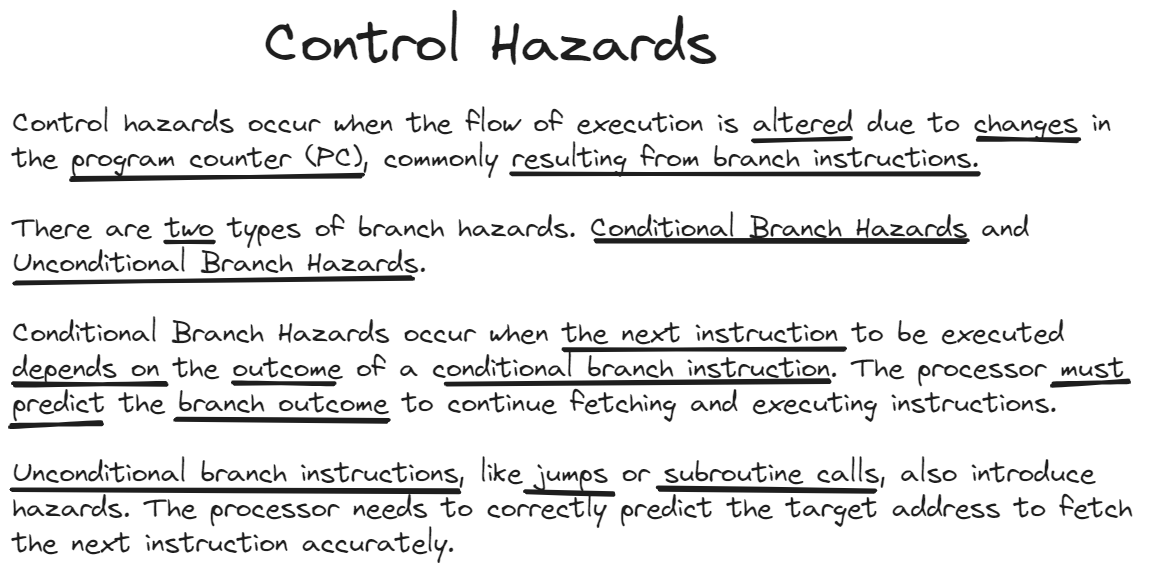

#GPUProgramming - Day 08: 🚀 Explored #computerarchitecture today! 🖥️ Control hazards are tackled with branch prediction, and the #Pentium FDIV bug (a flaw in the chip's floating-point divider) is a classic example of a hardware bug. 💡 Memory #Hierarchy is key: #RAM, #cache levels (L1, L2, L3), and storage devices play crucial roles. 🔄🌐 #Memory #LearnInPublic
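Branch prediction attacks control hazards by guessing a branch's outcome before it resolves. One classic scheme is a 2-bit saturating counter per branch. The sketch below assumes a hypothetical 16-entry table indexed by `pc % 16` and is not modeled on any specific processor.

```python
# A 2-bit saturating-counter branch predictor. States 0-1 predict
# "not taken", 2-3 predict "taken". Table size and indexing are assumptions.

class TwoBitPredictor:
    def __init__(self, table_size=16):
        self.table = [1] * table_size  # start in "weakly not taken"

    def _index(self, pc):
        return pc % len(self.table)

    def predict(self, pc):
        return self.table[self._index(pc)] >= 2

    def update(self, pc, taken):
        i = self._index(pc)
        if taken:
            self.table[i] = min(3, self.table[i] + 1)
        else:
            self.table[i] = max(0, self.table[i] - 1)


def accuracy(outcomes, pc=0x40):
    p = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        correct += p.predict(pc) == taken
        p.update(pc, taken)
    return correct / len(outcomes)


# A loop branch: taken 9 times, then falls through once.
print(accuracy([True] * 9 + [False]))  # 0.8
```

On this loop pattern the predictor misses only the first iteration and the loop exit; the second bit of hysteresis is what keeps a single exit from flipping the prediction for the next run of the loop.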

⚡ Built my own graphics engine: Asthrarisine. Sounds fun? Reality = invisible meshes, memory bugs & shader headaches. But here’s what made it work: #OpenGL #GraphicsEngine #GPUProgramming #GLTF #GameDev #ShaderProgramming

#GPUProgramming - Day 06: 🔍 Diving into computer architecture! 🖥️ Structural hazards arise when two instructions need the same hardware resource (e.g., a single memory port) in the same cycle, forcing one of them to stall. Data hazards? RAW, WAR, WAW: the battle for data paths and registers! 💡 #COA #LearnInPublic

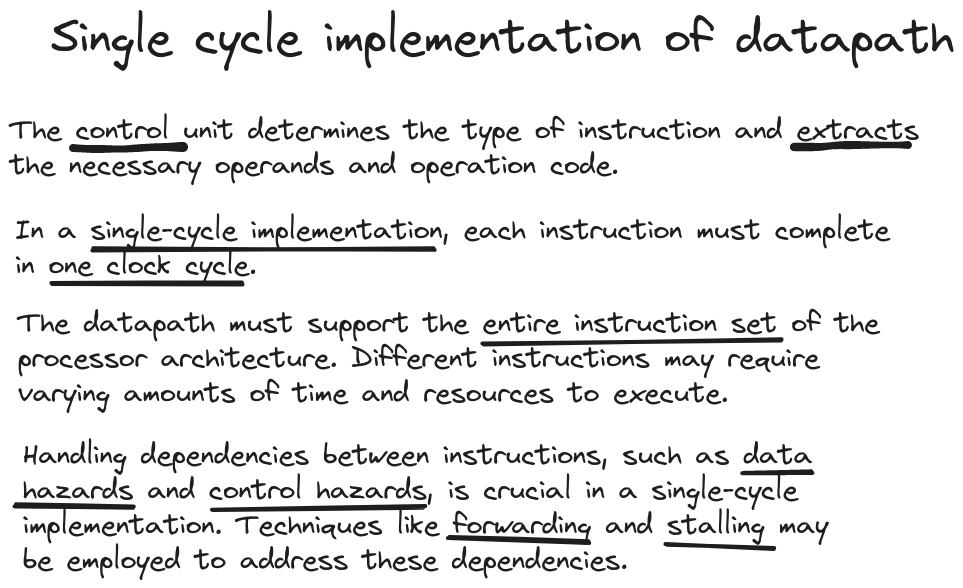

#GPUProgramming - Day 04: 🕰️ Dive into processor architectures! 🧠 Single-cycle execution finishes every instruction in one long clock cycle, so the clock period must fit the slowest instruction. 🔄 Multi-cycle designs break each instruction into steps, one shorter cycle per step, for a more intricate dance with time. ⏳ #ComputerArchitecture #LearnInPublic 🚀
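A worked comparison under loudly stated assumptions: the stage latencies, the stages each instruction class uses, and the 100-instruction mix below are illustrative numbers, not measurements. The single-cycle clock must fit the slowest instruction, while the multi-cycle clock only has to fit the slowest stage.

```python
# Compare total execution time of single-cycle and multi-cycle datapaths.
# Stage latencies (ps), steps per instruction class, and the instruction mix
# are illustrative assumptions.

STAGE_PS = {"IF": 200, "ID": 100, "EX": 200, "MEM": 200, "WB": 100}
STEPS = {  # stages each instruction class uses
    "load":   ["IF", "ID", "EX", "MEM", "WB"],
    "store":  ["IF", "ID", "EX", "MEM"],
    "alu":    ["IF", "ID", "EX", "WB"],
    "branch": ["IF", "ID", "EX"],
}


def single_cycle_ps(mix):
    # One cycle per instruction, but that cycle must fit the slowest instruction.
    clock = max(sum(STAGE_PS[s] for s in steps) for steps in STEPS.values())
    return sum(mix.values()) * clock


def multi_cycle_ps(mix):
    # One short cycle per step; the cycle must fit the slowest single stage.
    clock = max(STAGE_PS.values())
    cycles = sum(count * len(STEPS[kind]) for kind, count in mix.items())
    return cycles * clock


mix = {"load": 25, "store": 10, "alu": 45, "branch": 20}
print(single_cycle_ps(mix), multi_cycle_ps(mix))  # 80000 81000
```

With these particular numbers the multi-cycle design comes out slightly slower, because the 100 ps ID and WB steps are each rounded up to a full 200 ps cycle. It pays off when stage latencies are balanced or when some instructions are much longer than the rest.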

#GPUProgramming - Day 05: 🚀 Pipelining in computer architecture boosts performance by dividing instruction execution into stages. Techniques like forwarding, branch prediction, and superscalar processors enhance parallelism.💻🌐 #ComputerArchitecture #Pipelining #LearnInPublic
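With k pipeline stages and n instructions, an ideal (stall-free) pipeline needs k + (n - 1) cycles instead of n * k, so the speedup approaches k for large n. The helper names below are hypothetical, the unpipelined baseline assumes the same cycle length per stage, and `stalls` is a crude stand-in for the hazard penalties that forwarding and branch prediction try to reduce.

```python
# Ideal pipeline timing: the first instruction takes k cycles to drain
# through all stages, and each later one completes one cycle after the last.

def unpipelined_cycles(n, k):
    return n * k


def pipelined_cycles(n, k, stalls=0):
    return k + (n - 1) + stalls if n > 0 else 0


def speedup(n, k, stalls=0):
    return unpipelined_cycles(n, k) / pipelined_cycles(n, k, stalls)


print(pipelined_cycles(100, 5))   # 104
print(round(speedup(100, 5), 2))  # 4.81
```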

Programming Tensor Cores in Unity with the WMMA (Warp Matrix Multiply-Accumulate) API. #gpuprogramming #unity3d I have written a minimal example: github.com/przemyslawzawo…

🚀 Exciting Learning Opportunity! 🚀 For more details and registration: events.eurocc.lu/meluxina-intro… #GPUProgramming #CUDA #Supercomputing #ScientificComputing #MeluXina #Luxembourg

🚀 Struggling to set up Nvidia's OpenCL on Linux? This step-by-step guide covers it all: ✅ What is the ICD loader? ✅ Installing Nvidia’s OpenCL & headers ✅ Installing clinfo for validation 🎥 Watch now: youtu.be/BIQOz5dfyoY #OpenCL #Linux #GPUProgramming #Nvidia #TheWolfAround

GPU PROGRAMMING PLAYLIST youtube.com/playlist?list=… #gpu #gpuprogramming #vulkan #vulkanapi #computergraphics #hpc #highperformancecomputing #nvidia #intel #amd #howtocode #howtoprogram #raytracing #dataviz #infographics #art #digitalart #artist #cudaeducation

GPU CODING PLAYLIST youtube.com/playlist?list=… #gpu #gpuprogramming #vulkan #vulkanapi #computergraphics #hpc #highperformancecomputing #nvidia #intel #amd #howtocode #howtoprogram #raytracing #dataviz #infographics #art #digitalart #artist #cudaeducation

COMPUTER GRAPHICS | Frustum culling equation to discard objects that fall outside the view frustum youtu.be/WmQPuaj_j4k #gpu #gpuprogramming #frustumculling #computergraphics #hpc #howtocode #howtoprogram #geometry #shader #intel #amd #nvidia #dataviz #computersimulation #cudaeducation

YouTube: Vulkan API Discussion | Frustrum Culling + Level of Detail + Indirect...
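A common way to implement frustum culling is to test each object's bounding sphere against the frustum's six planes and discard it if it lies fully outside any one of them. The sketch assumes planes stored as `(nx, ny, nz, d)` with unit normals pointing inward, and uses a box-shaped view volume only to keep the numbers simple; the equation in the video may be formulated differently.

```python
# Bounding-sphere vs. view-frustum test. A point p is inside a plane when
# n . p + d >= 0 (unit normal n pointing into the frustum, an assumption).

def signed_distance(plane, point):
    nx, ny, nz, d = plane
    x, y, z = point
    return nx * x + ny * y + nz * z + d


def sphere_visible(planes, center, radius):
    # Cull only if the sphere lies entirely on the outside of some plane.
    return all(signed_distance(p, center) >= -radius for p in planes)


# Illustrative view volume -1 <= x, y, z <= 1 as six inward-facing planes.
BOX = [(1, 0, 0, 1), (-1, 0, 0, 1), (0, 1, 0, 1),
       (0, -1, 0, 1), (0, 0, 1, 1), (0, 0, -1, 1)]

objects = {"inside": ((0, 0, 0), 0.5), "straddling": ((1.2, 0, 0), 0.5),
           "outside": ((3, 0, 0), 0.5)}
print([name for name, (c, r) in objects.items() if sphere_visible(BOX, c, r)])
# ['inside', 'straddling']
```

The test is conservative: a sphere near a frustum corner can pass all six plane tests while still being invisible, which costs a little extra drawing but never drops a visible object.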

NSIGHT GRAPHICS TUTORIAL: youtu.be/LtretfoL2tc | Vulkan, OpenGL, Direct3D profiling and debugging | #graphicsprogramming #gpuprogramming #gpgpu #howtoprogram #howtocode #computerprogramming #howtowriteaprogram #siliconvalley #cudaeducation

YouTube: Nsight Graphics Tutorial | Cuda Education

@nvidia GPU bootcamp in the Claustro of @URosario. @HPCCol growing stronger. #HPC #GPUProgramming #OpenACC

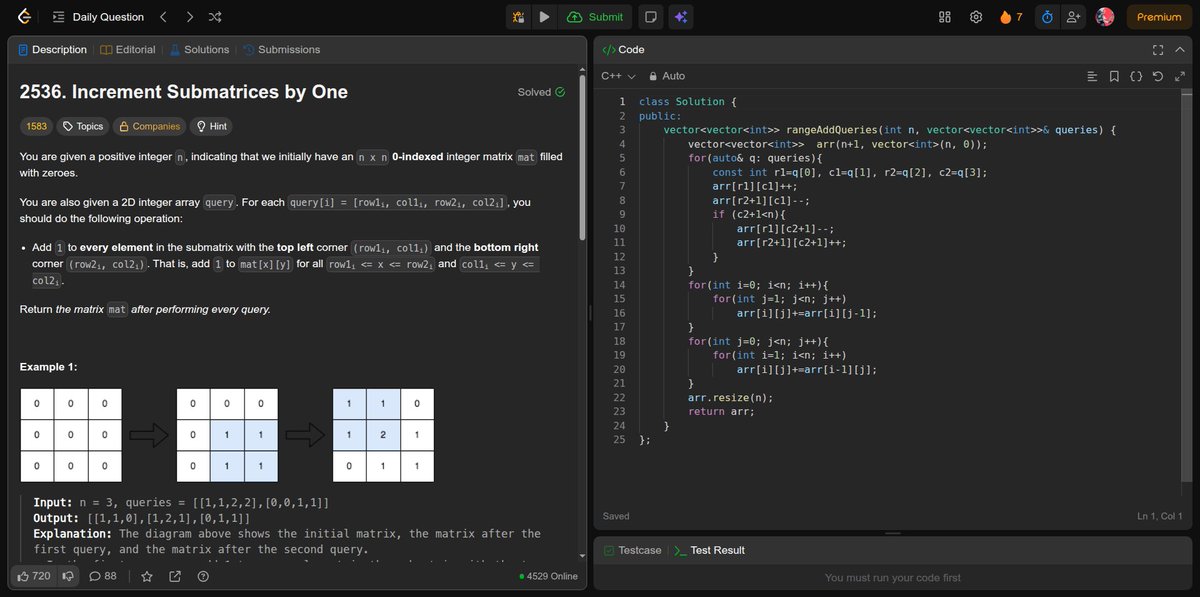

Day 5 of GPU Programming: -matrix transpose -LeetCode: matrix prefix sum #100DaysOfGPU #CUDA #GPUProgramming #ParallelComputing #AI #DeepLearning #100DaysOfCode #MachineLearning #NVIDIA #CodingJourney
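Sequential sketches of the two Day 5 topics, with loops standing in for GPU threads: a transpose where each (row, col) thread writes `out[col][row]`, and a 1D Hillis-Steele inclusive scan, where each `while` iteration would be one parallel pass followed by a barrier. Function names are hypothetical; a 2D prefix sum can apply the 1D scan along rows and then along columns.

```python
# Transpose: each (row, col) pair corresponds to one GPU thread.
def transpose(m):
    rows, cols = len(m), len(m[0])
    out = [[0] * rows for _ in range(cols)]
    for row in range(rows):
        for col in range(cols):
            out[col][row] = m[row][col]
    return out


# Hillis-Steele inclusive scan: log2(n) passes, stride doubling each pass.
def hillis_steele_scan(xs):
    cur = list(xs)
    offset = 1
    while offset < len(cur):
        # Double buffering: read only `cur`, write only `nxt`, as threads would.
        nxt = [cur[i] + cur[i - offset] if i >= offset else cur[i]
               for i in range(len(cur))]
        cur, offset = nxt, offset * 2   # barrier, then double the stride
    return cur


print(transpose([[1, 2, 3], [4, 5, 6]]))    # [[1, 4], [2, 5], [3, 6]]
print(hillis_steele_scan([3, 1, 7, 0, 4]))  # [3, 4, 11, 11, 15]
```

The double buffer matters: updating in place would let a thread read a value another thread already overwrote in the same pass.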

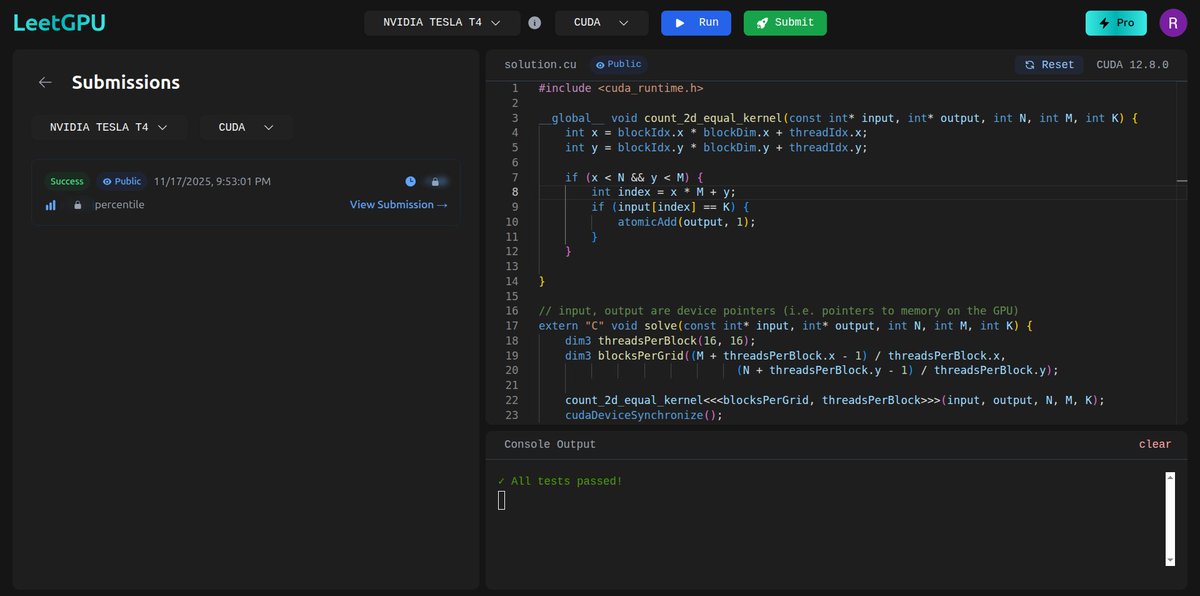

Day 8 of GPU Programming: -Count 2D Array Element -today's LeetCode POTD was easy peasy again. #100DaysOfGPU #CUDA #GPUProgramming #ParallelComputing #AI #DeepLearning #100DaysOfCode #MachineLearning #NVIDIA #CodingJourney
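A sketch assuming the exercise asks for the number of entries equal to a target value `k` (the post does not say). Loops stand in for threads; each hypothetical 2x2 block tallies its own tile and contributes once to the total, mirroring a CUDA pattern of one `atomicAdd` per block rather than one per matching thread, which reduces contention on the shared counter.

```python
# Count 2D-array entries equal to k, structured like a blocked CUDA kernel.

BLOCK = 2  # threads per block in each dimension (assumed)


def count_equal(grid2d, k):
    rows = len(grid2d)
    cols = len(grid2d[0]) if rows else 0
    total = 0  # stands in for the global counter updated with atomicAdd
    for by in range(0, rows, BLOCK):
        for bx in range(0, cols, BLOCK):
            local = 0  # per-block count, e.g. kept in shared memory
            for r in range(by, min(by + BLOCK, rows)):
                for c in range(bx, min(bx + BLOCK, cols)):
                    local += grid2d[r][c] == k
            total += local  # one "atomicAdd" per block
    return total


print(count_equal([[1, 2, 1], [3, 1, 4], [1, 5, 9]], 1))  # 4
```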

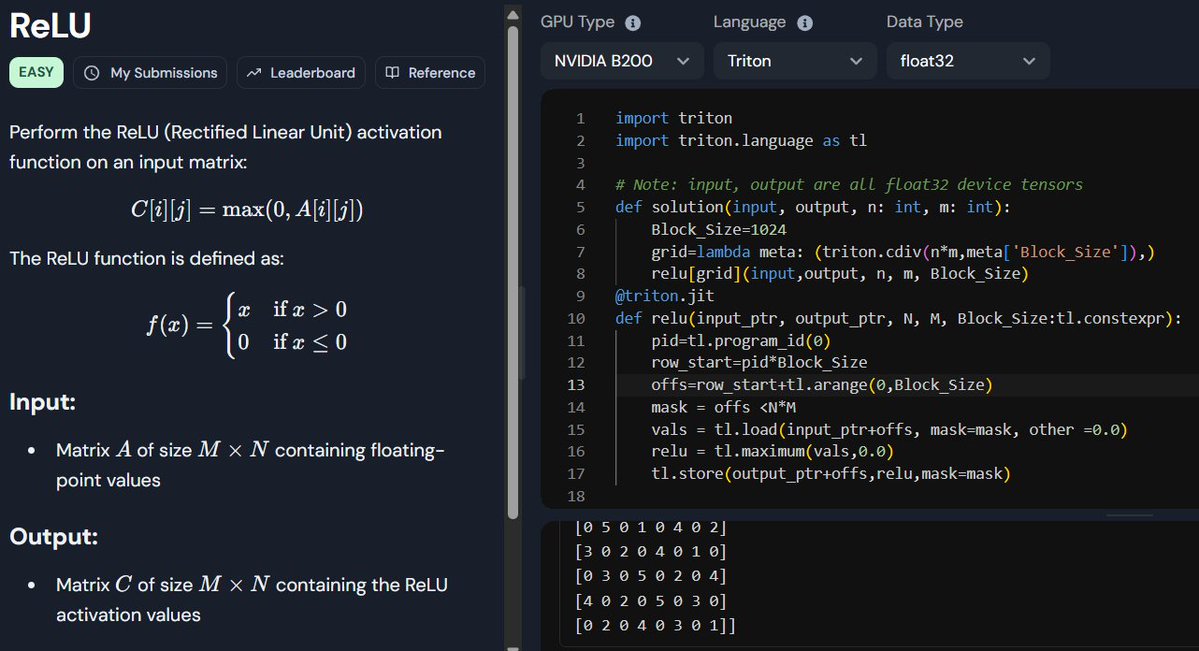

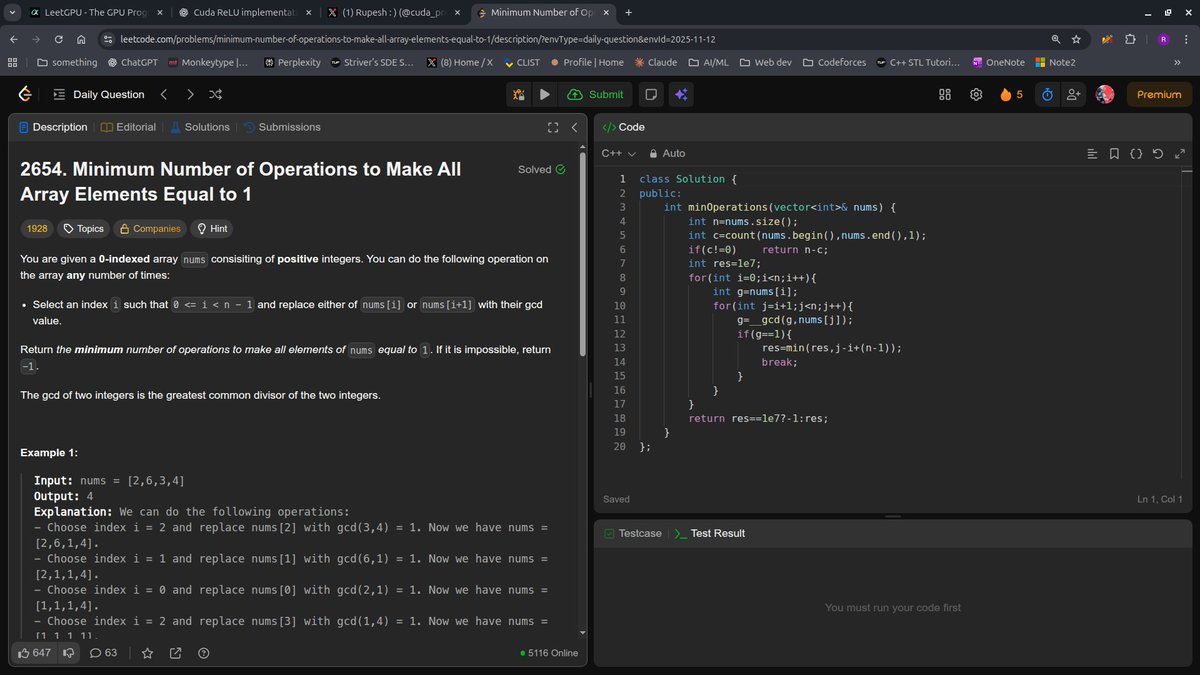

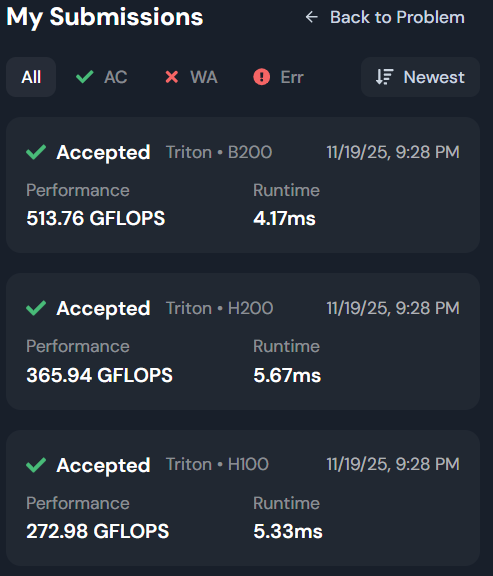

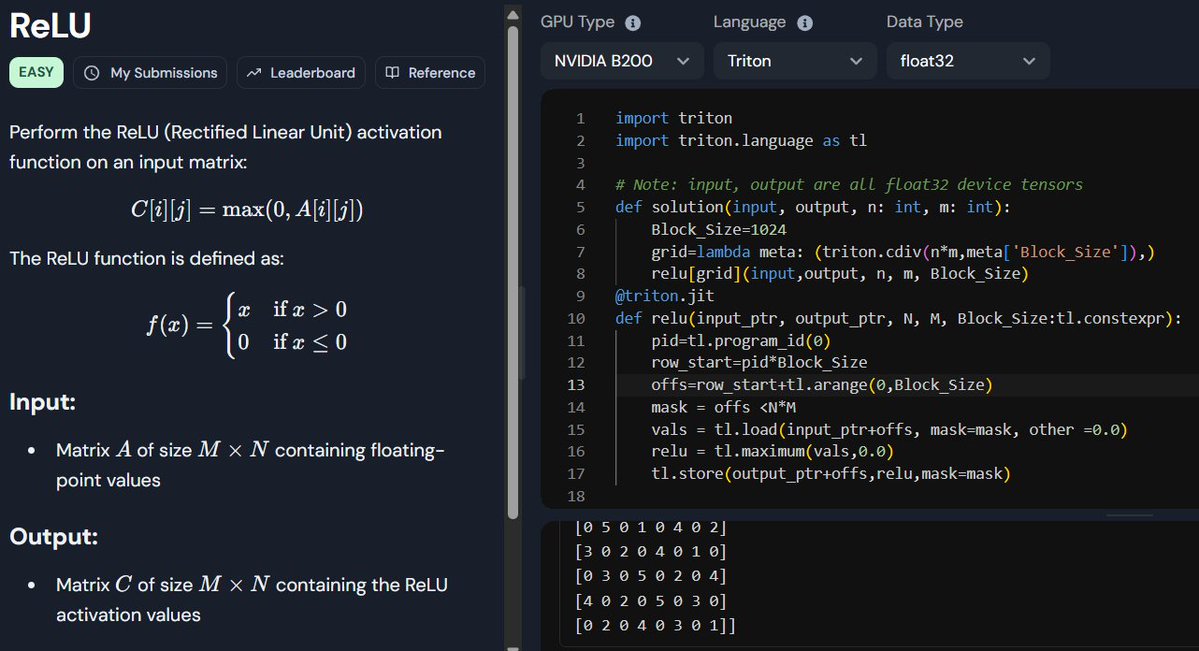

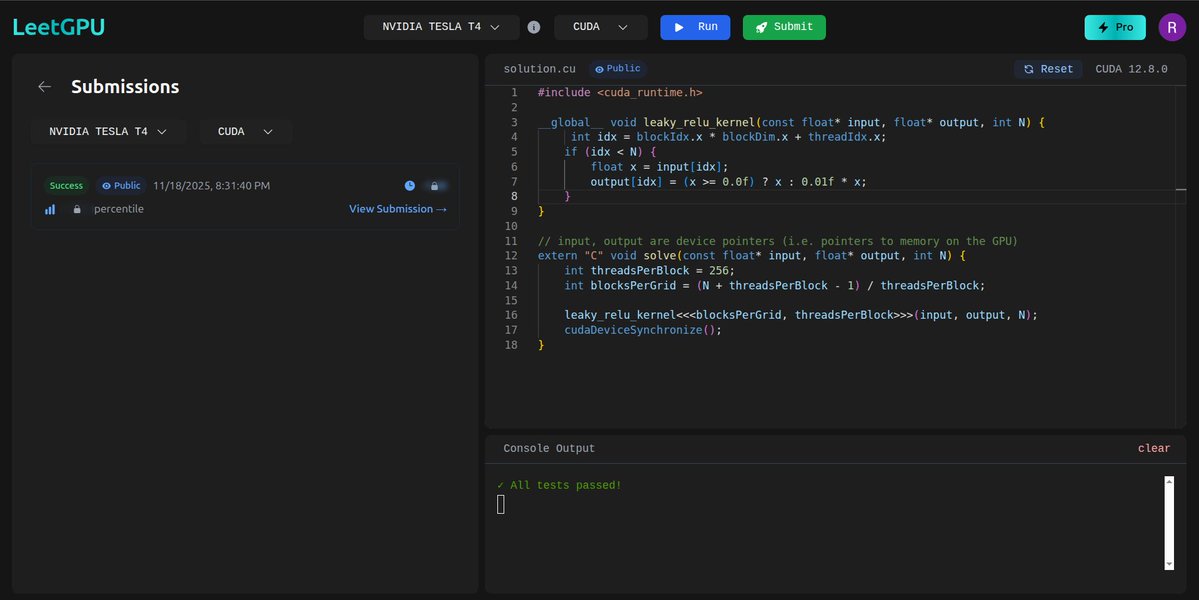

Day 3 of GPU Programming: -implemented ReLU activation for a 1D array -did the LeetCode POTD -explored how GPUs handle element-wise operations in parallel #100DaysOfGPU #CUDA #GPUProgramming #ParallelComputing #AI #DeepLearning #100DaysOfCode #MachineLearning #NVIDIA #CodingJourney
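Element-wise operations like ReLU map naturally to one thread per element. The sketch below emulates a grid-stride loop, where global thread `i` also handles `i + stride`, `i + 2*stride`, and so on; the launch sizes are arbitrary assumptions, and the Python loops stand in for threads that would run concurrently.

```python
# ReLU over a 1D array using grid-stride indexing.

def relu_kernel(thread_id, stride, x, out):
    for i in range(thread_id, len(x), stride):
        out[i] = x[i] if x[i] > 0 else 0.0


def relu(x, blocks=2, threads_per_block=4):
    out = [0.0] * len(x)
    stride = blocks * threads_per_block  # total threads in the grid
    for block in range(blocks):
        for thread in range(threads_per_block):
            # Equivalent of blockIdx.x * blockDim.x + threadIdx.x
            tid = block * threads_per_block + thread
            relu_kernel(tid, stride, x, out)
    return out


print(relu([-2.0, -0.5, 0.0, 1.5, 3.0]))  # [0.0, 0.0, 0.0, 1.5, 3.0]
```

The grid-stride form lets a fixed-size launch cover an array of any length, which is handy when the input size is not known when choosing the grid.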

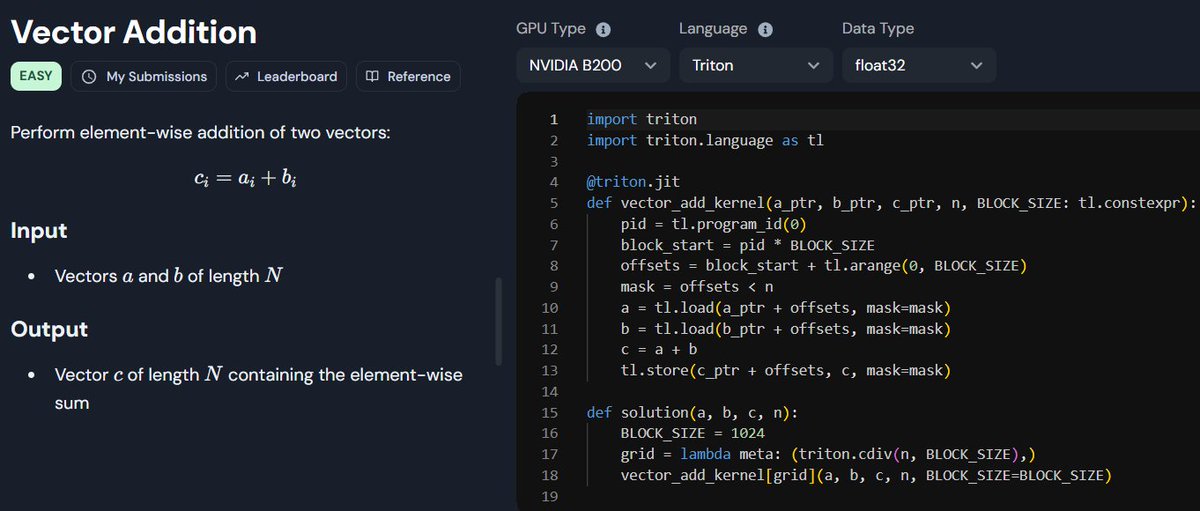

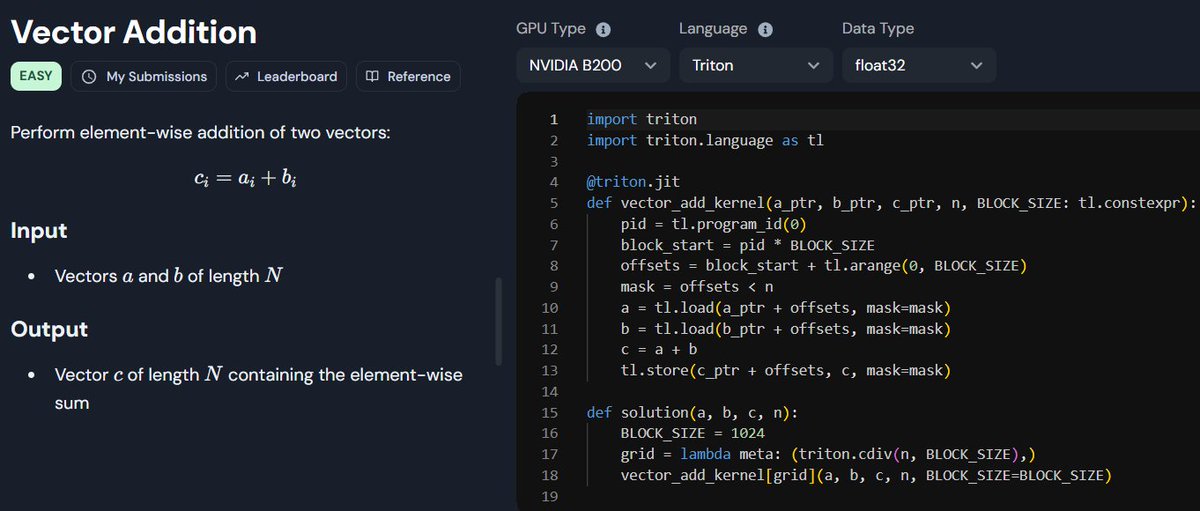

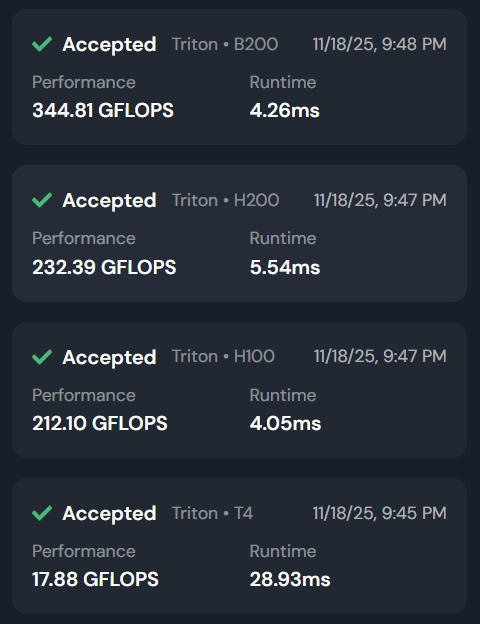

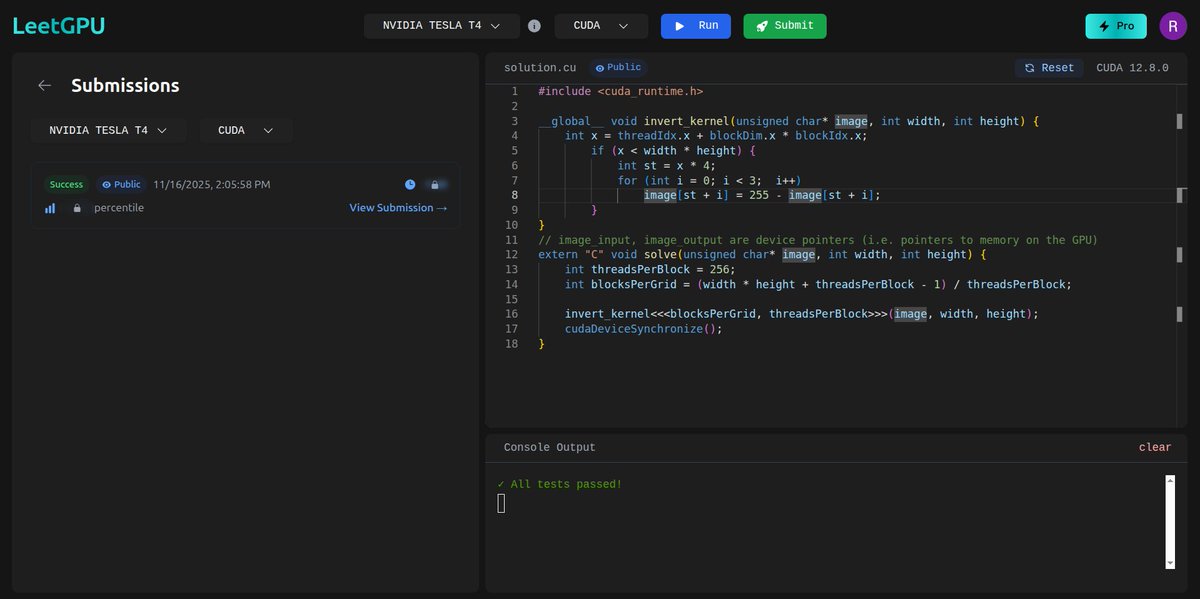

Day 7 of GPU Programming: -color inversion -today's POTD was easy peasy. #100DaysOfGPU #CUDA #GPUProgramming #ParallelComputing #AI #DeepLearning #100DaysOfCode #MachineLearning #NVIDIA #CodingJourney
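A sketch assuming the image is a flat RGBA buffer with 8 bits per channel, and that inversion means `255 - value` for R, G and B while alpha stays unchanged (an assumption about the exercise). One emulated thread handles one pixel; since no two threads touch the same bytes, no synchronization is needed.

```python
# Color inversion on a flat RGBA8 buffer, one "thread" per pixel.

def invert_pixel(pixel_id, image):
    base = 4 * pixel_id
    for c in range(3):  # R, G, B
        image[base + c] = 255 - image[base + c]
    # image[base + 3] (alpha) is left unchanged


def invert_colors(image):
    img = bytearray(image)  # copy so the input is not mutated
    for pixel_id in range(len(img) // 4):
        invert_pixel(pixel_id, img)
    return bytes(img)


print(list(invert_colors(bytes([255, 0, 128, 200, 10, 20, 30, 255]))))
# [0, 255, 127, 200, 245, 235, 225, 255]
```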

Compilation errors when using OpenACC with g++ 10 stackoverflow.com/questions/6542… #openacc #gpuprogramming #g++ #compilererrors #cpp

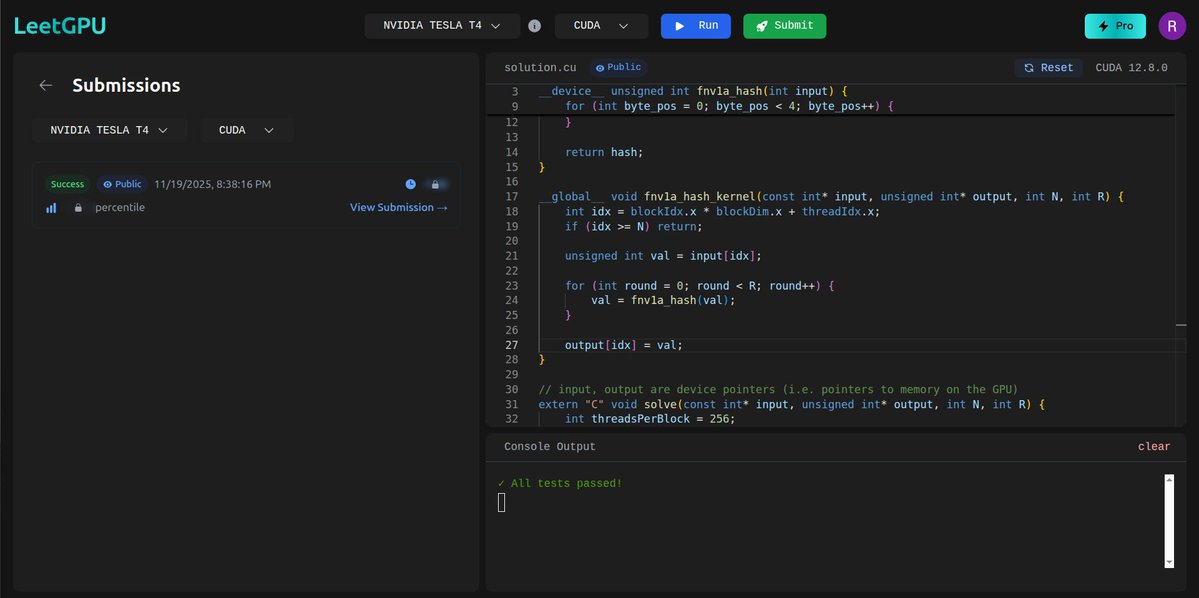

Day 10 of GPU Programming: -Rainbow Table -4th consecutive easy problem on LeetCode #100DaysOfGPU #CUDA #GPUProgramming #ParallelComputing #AI #DeepLearning #100DaysOfCode #MachineLearning #NVIDIA #CodingJourney pic.x.com/FYOUlLsQy3
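A sketch of the embarrassingly parallel structure of repeated hashing: each element gets its own thread, and the rounds for that element run sequentially inside it. The hash is an assumption: 32-bit FNV-1a over the value's four little-endian bytes stands in for whatever function the exercise specifies, and `hash_rounds` is a hypothetical name.

```python
# Repeated 32-bit FNV-1a hashing, one "thread" per input element.

FNV_OFFSET = 0x811C9DC5  # 32-bit FNV offset basis
FNV_PRIME = 0x01000193   # 32-bit FNV prime


def fnv1a_32(value):
    h = FNV_OFFSET
    for byte in (value & 0xFFFFFFFF).to_bytes(4, "little"):
        h ^= byte
        h = (h * FNV_PRIME) & 0xFFFFFFFF  # emulate uint32 wraparound
    return h


def hash_rounds(values, rounds):
    out = []
    for v in values:            # each element would be one GPU thread
        h = v
        for _ in range(rounds):  # rounds are sequential within a thread
            h = fnv1a_32(h)
        out.append(h)
    return out


print(hash_rounds([1, 2, 3], 2))
```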

Day 6 of GPU Programming: -matrix addition -today's POTD was on the harder side. #100DaysOfGPU #CUDA #GPUProgramming #ParallelComputing #AI #DeepLearning #100DaysOfCode #MachineLearning #NVIDIA #CodingJourney pic.x.com/9qrJliv3Fd http…
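Matrix addition is usually the first kernel that needs 2D indexing. The sketch below reproduces the usual `row = blockIdx.y * blockDim.y + threadIdx.y` and `col = blockIdx.x * blockDim.x + threadIdx.x` arithmetic in Python, with a hypothetical 16x16 block shape and a bounds check for the partially filled edge blocks.

```python
# Element-wise matrix addition with CUDA-style 2D block/thread indexing.

BLOCK_DIM = 16  # threads per block in x and y (assumed)


def ceil_div(a, b):
    return (a + b - 1) // b


def matrix_add(A, B):
    rows, cols = len(A), len(A[0])
    C = [[0] * cols for _ in range(rows)]
    grid_y, grid_x = ceil_div(rows, BLOCK_DIM), ceil_div(cols, BLOCK_DIM)
    for block_y in range(grid_y):
        for block_x in range(grid_x):
            for thread_y in range(BLOCK_DIM):
                for thread_x in range(BLOCK_DIM):
                    row = block_y * BLOCK_DIM + thread_y
                    col = block_x * BLOCK_DIM + thread_x
                    if row < rows and col < cols:  # skip out-of-range threads
                        C[row][col] = A[row][col] + B[row][col]
    return C


print(matrix_add([[1, 2], [3, 4]], [[10, 20], [30, 40]]))  # [[11, 22], [33, 44]]
```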

Day 9 of GPU Programming: -Leaky ReLU -LeetCode POTD: easy, but hard to implement #100DaysOfGPU #CUDA #GPUProgramming #ParallelComputing #AI #DeepLearning #100DaysOfCode #MachineLearning #NVIDIA #CodingJourney
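Leaky ReLU differs from ReLU only in passing `alpha * x` instead of zero for negative inputs. The sketch assumes `alpha = 0.01`, a common default rather than anything stated in the post, and shows that for `0 < alpha < 1` the branch-free form `max(x, alpha * x)` gives the same result, which can help a GPU kernel avoid divergent branches within a warp.

```python
# Leaky ReLU, one "thread" per element: f(x) = x if x > 0 else alpha * x.

ALPHA = 0.01  # assumed default slope for negative inputs


def leaky_relu_branchy(x, alpha=ALPHA):
    return [v if v > 0 else alpha * v for v in x]


def leaky_relu_branch_free(x, alpha=ALPHA):
    assert 0 < alpha < 1, "max() form requires 0 < alpha < 1"
    return [max(v, alpha * v) for v in x]


data = [-100.0, -1.0, 0.0, 2.5]
print(leaky_relu_branchy(data))
print(leaky_relu_branch_free(data))
```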

NSIGHT GRAPHICS TUTORIAL: amzn.to/2Qffvpl | Vulkan, OpenGL, Direct3D profiling and debugging | #graphicsprogramming #gpuprogramming #gpgpu #howtoprogram #howtocode #computerprogramming #howtowriteaprogram #siliconvalley #nsightgraphicstutorial #videowalkthrough
